C++ APIs

The Palette software provides a set of C++ functions that let a third-party AI developer integrate the SiMa MLSoC device (connected in PCIe mode) into their solution. Using the C++ API functions, a developer can detect, enumerate, and open a device; load and unload (remove) a model; run synchronous and asynchronous inference; and finally close the device.

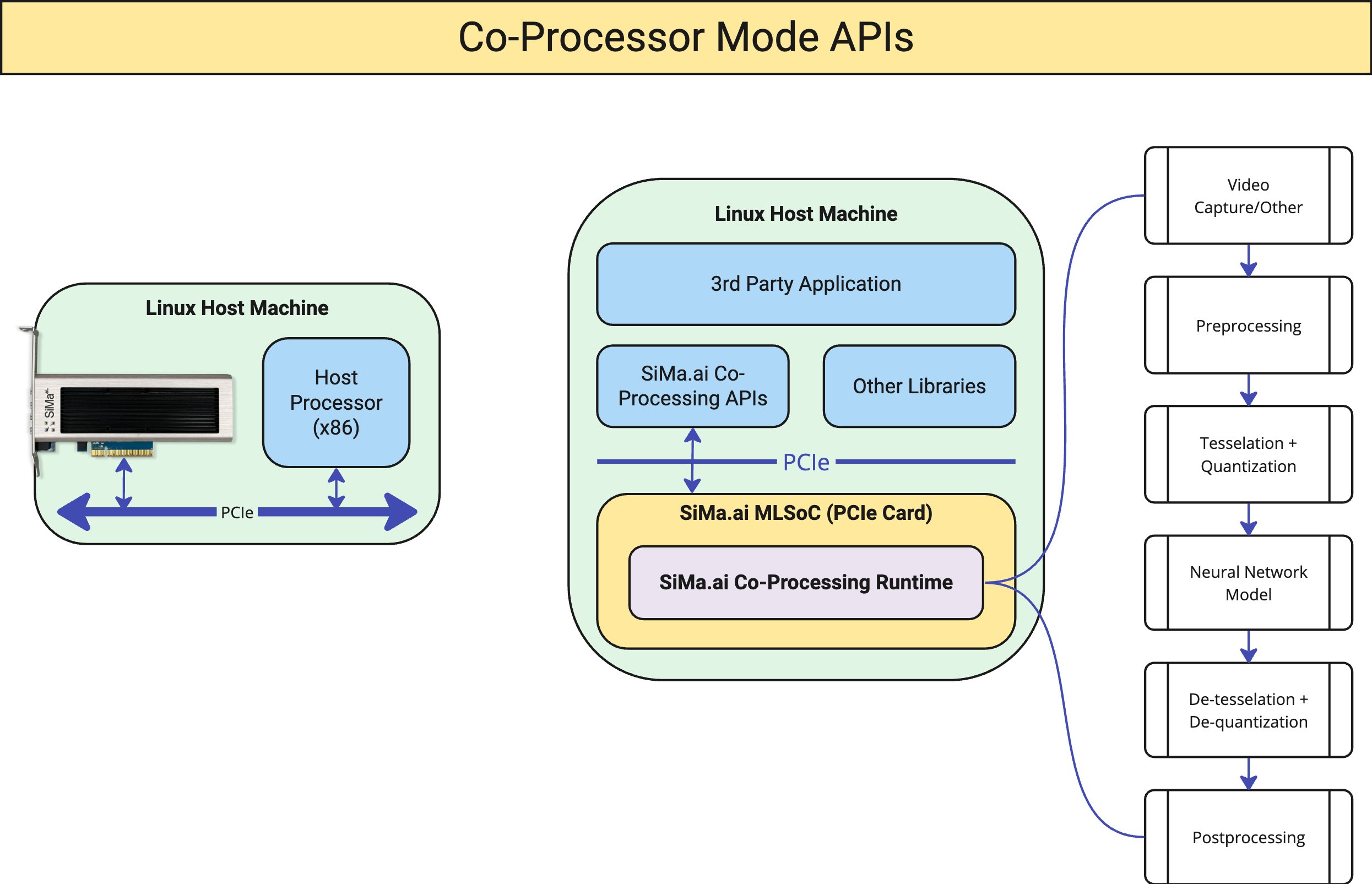

Co-Processor Mode

In co-processor mode, the SiMa.ai MLSoC can be paired with a host system through PCIe and a host CPU can offload portions of the ML application to the MLSoC. The APIs are integrated into the host C++ application, which will then communicate with the MLSoC through PCIe.

Note

Currently, this mode only supports running inference tasks on the Machine Learning Accelerator (MLA) of the MLSoC (quant -> NN Model -> dequant). Any valid GStreamer PCIe pipeline can also be supported by manually generating the MPK in the SDK with our support. A subsequent release will add the ability to fully leverage all the IPs and run the pre- and post-processing operations on the MLSoC.

Board Setup via PCIe Interface

The following pre-requisites must be in place before you begin board bring-up. For instructions on how to bring up the MLSoC device/board in PCIe mode, see Setting Up the Board in PCIe Mode.

Pre-requisites

Make sure the MLSoC device has been updated with the latest firmware, using the CLI SDK.

Install the package `sima_pcie_host_pkg.sh`, included in `MLSoC1.4_Firmware.tar` in the Palette software. Make sure you have the appropriate sudo permissions on your host machine. See Setting Up the Board in PCIe Mode. The host package installs the device driver, GStreamer plugins, and the C++ and C libraries used to communicate with the SiMa Controller.

- The examples provided with the functions can be built and tested using the correct model (`.mpk`) file. The example inputs and outputs, along with the model file, can also be used to build and test.

Running an Example Pipeline in PCIe Mode (ResNet50)

Follow the steps in this section to compile a model and run the resulting application on the MLSoC device connected over PCIe.

Pre-requisites

An Ubuntu 22.04 host computer with four Gen3/4 PCIe slots.

An MLSoC device connected via PCIe. For instructions on Board Bring-up in PCIe Mode, see Setting Up the Board in PCIe Mode.

Palette software installed on your host computer. See Palette Software Installation, earlier in this document.

Install the OpenCV C++ library using the package manager.

    sima-user@sima-user-machine:~$ sudo apt-get update
    sima-user@sima-user-machine:~$ sudo apt install -y libgtk2.0-dev
    sima-user@sima-user-machine:~$ sudo apt-get install -y libopencv-dev

Steps

Compile a model using the Palette software's ModelSDK tool and the appropriate calibration dataset to generate a `tar.gz` file. Download the files listed below and follow the setup instructions to compile a ResNet50 model.

- Compilation script: `resnet50_compile_script.tar.xz`
- Calibration dataset: `calibrate.tar.xz`
- Test images: `test_images.tar.xz`

Copy the files to the folder shared between the Palette CLI SDK and your host machine. The commands below use the default folder, `workspace`; if you changed the name of your shared folder, substitute it.

    sima-user@sima-user-machine:~$ cd
    sima-user@sima-user-machine:~$ mkdir workspace
    sima-user@sima-user-machine:~$ cd workspace && mkdir resnet50 && cd resnet50
    sima-user@sima-user-machine:~/workspace/resnet50$ mv ~/Downloads/resnet50_compile_script.tar.xz ./
    sima-user@sima-user-machine:~/workspace/resnet50$ mv ~/Downloads/calibrate.tar.xz ./
    sima-user@sima-user-machine:~/workspace/resnet50$ mv ~/Downloads/test_images.tar.xz ./
    sima-user@sima-user-machine:~/workspace/resnet50$ tar -xvf resnet50_compile_script.tar.xz
    sima-user@sima-user-machine:~/workspace/resnet50$ tar -xvf calibrate.tar.xz
    sima-user@sima-user-machine:~/workspace/resnet50$ tar -xvf test_images.tar.xz

Start the SDK Docker to compile your model.

The compilation script `resnet50_compile_script.tar.xz` will run the following tasks:

Download the ResNet50 model.

Quantize the model.

Test the quantized model.

Compile the model and save the output to `resnet50_mpk/resnet50.tar.gz`. This output file will be used to create the MPK file as described below.

    sima-user@sima-user-machine:~/Downloads/1.4.0_master_B1230/sima-cli$ python3 start.py
    Checking if the container is already running...
    ==> Container is already running. Proceeding to start an interactive shell.
    sima-user@docker-image-id:/home$ cd /home/docker/sima-cli/resnet50 #Go to the resnet50 path inside the SDK
    sima-user@docker-image-id:/home/docker/sima-cli/resnet50$ python3 download_and_compile_resnet50.py
    SiMa Model SDK tutorial example of ONNX YoloV5 -- Mixed graph of MLA + EV74 + A65
    Model SDK version: 1.4.0
    Downloading model...
    Model downloaded successfully!
    Input Names: ['data']
    Loading model resnet50_v2.onnx
    Using dataset: ./calibrate/*
    Number of inputs in the dataset: 70
    Input shape: (1, 224, 224, 3)
    Quantizing the model ...
    Running calibration ...DONE
    2024-05-13 04:25:54,990 - afe.ir.defines - WARNING - In node MLA_0/conv2d_add_relu_1, Precision of weights was reduced to avoid numeric saturation. Saturation was detected in the bias term.
    Running quantization ...DONE
    Using dataset: ./test_images/*
    Testing Quantized model:
    Folder './resnet50_v2-results' already exists. Ignoring creation.
    Img: ./test_images/000000009448.jpg label: 879: 'umbrella',
    Img: ./test_images/000000007784.jpg label: 701: 'parachute, chute',
    Img: ./test_images/000000001584.jpg label: 874: 'trolleybus, trolley coach, trackless trolley',
    Img: ./test_images/000000000785.jpg label: 795: 'ski',
    Img: ./test_images/000000011813.jpg label: 872: 'tripod',
    Img: ./test_images/000000007108.jpg label: 385: 'Indian elephant, Elephas maximus',
    Img: ./test_images/000000002006.jpg label: 874: 'trolleybus, trolley coach, trackless trolley',
    Img: ./test_images/000000006894.jpg label: 385: 'Indian elephant, Elephas maximus',
    Compiling the model ...
    MPK JSON with LM/SO files are generated in folder ./resnet50_mpk
    End of tutorial example of ONNX ResNet50.

Creating an MPK

Using the MPK parser tool, create an MPK file from the compiled model `resnet50.tar.gz`. Please run the below commands inside the SDK docker.

Edit the `sample_cfg.json` file to modify the parameters shown below. The `sample_cfg.json` file is in `/usr/local/simaai/utils/mpk_parser/sample_cfg.json`.

    sima-user@docker-image-id:/home/docker/sima-cli/resnet50$ vi /usr/local/simaai/utils/mpk_parser/sample_cfg.json
    {
      "img_width": 224,
      "img_height": 224,
      "input_width": 224,
      "input_height": 224,
      "output_width": 224,
      "output_height": 224,
      "input_depth": 3,
      "keep_aspect": 0,
      "norm_channel_params": [[1.0, 0, 0.003921569], [1.0, 0, 0.003921569], [1.0, 0, 0.003921569]],
      "normalize": 1,
      "input_type": "RGB",
      "output_type": "RGB",
      "scaling_type": "INTER_LINEAR",
      "padding_type": "CENTER",
      "ibufname": "input"
    }
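The third value in each `norm_channel_params` triple, 0.003921569, equals 1/255. The sketch below shows what a per-channel scale of that size does to 8-bit RGB data. It rests on an assumption that this page does not confirm: that the value is a multiplicative scale applied to each channel. The helper name `scalePixels` is hypothetical, and the first two values of each triple (1.0 and 0) are deliberately left uninterpreted.

```cpp
#include <array>
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

// Hypothetical helper, not part of the SiMa API.
// Assumption: the last entry of each norm_channel_params triple is a
// per-channel multiplicative scale (0.003921569 is 1/255).
// Input is interleaved 8-bit RGB; output holds one float per input byte.
std::vector<float> scalePixels(const std::vector<std::uint8_t>& pixels,
                               const std::array<float, 3>& scale) {
    assert(pixels.size() % 3 == 0);  // expect whole RGB triples
    std::vector<float> out(pixels.size());
    for (std::size_t i = 0; i < pixels.size(); ++i) {
        // i % 3 selects the R, G, or B scale for this byte
        out[i] = static_cast<float>(pixels[i]) * scale[i % 3];
    }
    return out;
}
```

Under that assumption, a pixel value of 255 maps to approximately 1.0 and a value of 0 stays at 0.0, so the model input lies in the range 0 to 1.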

Run the python script with the required parameters as shown below.

    sima-user@docker-image-id:/home/docker/sima-cli/resnet50$ python3 /usr/local/simaai/utils/mpk_parser/m_parser.py -targz ./resnet50_mpk/resnet50_mpk.tar.gz -project /usr/local/simaai/app_zoo/Gstreamer/CPP_API_TestPipeline -cfg /usr/local/simaai/utils/mpk_parser/sample_cfg.json
    File preproc.json copied to /usr/local/simaai/app_zoo/Gstreamer/CPP_API_TestPipeline/plugins/pre_process/cfg
    File postproc.json copied to /usr/local/simaai/app_zoo/Gstreamer/CPP_API_TestPipeline/plugins/post_process/cfg
    File mla.json copied to /usr/local/simaai/app_zoo/Gstreamer/CPP_API_TestPipeline/plugins/process_mla/cfg
    File ./sima_temp/resnet50_stage1_mla.lm copied to /usr/local/simaai/app_zoo/Gstreamer/CPP_API_TestPipeline/plugins/process_mla/res/process.lm
    File ./sima_temp/resnet50_mpk.json copied to /usr/local/simaai/app_zoo/Gstreamer/CPP_API_TestPipeline/resources/mpk.json
    ℹ Step a65-apps COMPILE completed successfully.
    ℹ Step COMPILE completed successfully.
    ℹ Step COPY RESOURCE completed successfully
    ℹ Step RPM BUILD completed successfully.
    ✔ Successfully created MPK at '/home/docker/sima-cli/resnet50/project.mpk'
    MPK Created successfully.

This `project.mpk` file will be used by the C++ application.

Compiling and Running the Application Pipeline

Once the ResNet50 MPK is created, the application is loaded and run on the MLSoC device. Executing the MPK includes the following tasks:

Read the image → Pre-process (resize, normalise) → MLSoC CPP Sync Inference → post-process (argmax) → Overlay → save output.

Save the output images in the output folder.
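The argmax post-processing step in the pipeline above can be sketched in standard C++. This is a minimal illustration, not the code from the example application: it assumes the output tensor has already been copied into a flat vector of per-class scores, and the function name `argmax` is chosen here for illustration.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <iterator>
#include <vector>

// Illustrative helper, not part of the SiMa API.
// Returns the index of the largest score, i.e. the predicted class id.
// If several scores tie for the maximum, the lowest index is returned.
std::size_t argmax(const std::vector<float>& scores) {
    assert(!scores.empty());  // an empty tensor has no winning class
    const auto best = std::max_element(scores.begin(), scores.end());
    return static_cast<std::size_t>(std::distance(scores.begin(), best));
}
```

The returned index is then looked up in the label list to produce the text that is overlaid on the output image.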

Compile the example application on the Host PC outside the Docker SDK.

You can download the ResNet50 example `resnet50_pcie_application.tar.xz`.

    sima-user@sima-user-machine:~/workspace/resnet50$ tar -xvf resnet50_pcie_application.tar.xz
    CMakeLists.txt
    main.cpp
    resnet50_project.mpk
    sima-user@sima-user-machine:~/workspace/resnet50$ mkdir build && cd build
    sima-user@sima-user-machine:~/workspace/resnet50/build$ cmake ..
    -- The C compiler identification is GNU 11.4.0
    -- The CXX compiler identification is GNU 11.4.0
    -- Detecting C compiler ABI info
    -- Detecting C compiler ABI info - done
    -- Check for working C compiler: /usr/bin/cc - skipped
    -- Detecting C compile features
    -- Detecting C compile features - done
    -- Detecting CXX compiler ABI info
    -- Detecting CXX compiler ABI info - done
    -- Check for working CXX compiler: /usr/bin/c++ - skipped
    -- Detecting CXX compile features
    -- Detecting CXX compile features - done
    -- Found OpenCV: /usr/local (found version "4.6.0")
    -- Configuring done
    -- Generating done
    -- Build files have been written to: /home/sima-user/workspace/resnet50/build
    sima-user@sima-user-machine:~/workspace/resnet50/build$ make
    [ 50%] Building CXX object CMakeFiles/test_img.dir/main.cpp.o
    [100%] Linking CXX executable test_img
    [100%] Built target test_img

Execute the sample application.

    sima-user@sima-user-machine:~/workspace/resnet50/build$ ./test_img ./../project.mpk #for testing only the application use ./../resnet50_project.mpk
    Directory created or already exists: ./../output
    SiMaDevicePtr for GUID : sima_mla_c0 is : 0x5627ab5bf9b0
    sima_send_mgmt_file: File Name: ./../project.mpk
    sima_send_mgmt_file: File size 20184712
    sima_send_mgmt_file: Total Bytes sent 20184712 in 1 seconds
    Opening /dev/sima_mla_c0
    si_mla_create_data_queues: Data completion queue successfully created
    si_mla_create_data_queues: Data work queue successfully created
    si_mla_create_data_queues: Data receive queue successfully created
    loadModel is successful with modelPtr: 0x5627ab5c8b00
    runInferenceSynchronousloadModel_cppsdkpipeline
    modelPtr->inputShape.size:1
    modelPtr->inputShape: 224 224 3
    modelPtr->outputShape.size:1
    modelPtr->outputShape: 1 1 1000
    total Images:7
    ./../test_images/000000009448.jpg
    starting the run Synchronous Inference
    Time taken per iteration: 378 milliseconds
    Predicted label: 879: 'umbrella',
    Image with predicted label saved to: ./../output/000000009448.jpg
    ./../test_images/000000007784.jpg
    starting the run Synchronous Inference
    Time taken per iteration: 4 milliseconds
    Predicted label: 701: 'parachute, chute',
    Image with predicted label saved to: ./../output/000000007784.jpg
    ./../test_images/000000001584.jpg
    starting the run Synchronous Inference
    Time taken per iteration: 3 milliseconds
    label: 874: 'trolleybus, trolley coach, trackless trolley',
    Image with predicted label saved to: ./../output/000000001584.jpg
    ./../test_images/000000000785.jpg
    starting the run Synchronous Inference
    Time taken per iteration: 3 milliseconds
    Predicted label: 795: 'ski',
    Image with predicted label saved to: ./../output/000000000785.jpg
    ./../test_images/000000011813.jpg
    starting the run Synchronous Inference
    Time taken per iteration: 3 milliseconds
    Predicted label: 872: 'tripod',
    Image with predicted label saved to: ./../output/000000011813.jpg
    ./../test_images/000000007108.jpg
    starting the run Synchronous Inference
    Time taken per iteration: 3 milliseconds
    Predicted label: 385: 'Indian elephant, Elephas maximus',
    Image with predicted label saved to: ./../output/000000007108.jpg
    ./../test_images/000000002006.jpg
    starting the run Synchronous Inference
    Time taken per iteration: 3 milliseconds
    Predicted label: 874: 'trolleybus, trolley coach, trackless trolley',
    Image with predicted label saved to: ./../output/000000002006.jpg
    ./../test_images/000000006894.jpg
    starting the run Synchronous Inference
    Time taken per iteration: 3 milliseconds
    Predicted label: 385: 'Indian elephant, Elephas maximus',
    Image with predicted label saved to: ./../output/000000006894.jpg
    Average time per iteration/ inference: 2.95312 ms
    unloadModel for modelPtr : 0x5627ab5c8b00 successfully
    Sima Device with SiMaDevicePtr:0x5627ab5bf9b0 closed successfully
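In the log above, the first synchronous inference takes 378 milliseconds while the following ones take 3 to 4 milliseconds, so a first call can dominate a naive average. The sketch below is one way to measure steady-state latency with `std::chrono` by running untimed warm-up calls first. How the sample application computes its reported average is not shown on this page; the helper `averageMillis` and its parameters are hypothetical, and `run` stands in for whatever call performs one inference.

```cpp
#include <cassert>
#include <chrono>
#include <functional>

// Hypothetical helper, not part of the SiMa API.
// Calls `run` `warmup` times without timing, then times `iterations`
// further calls and returns the mean wall-clock time per call in ms.
double averageMillis(const std::function<void()>& run, int warmup, int iterations) {
    assert(warmup >= 0 && iterations > 0);
    for (int i = 0; i < warmup; ++i) {
        run();  // untimed: absorbs one-off setup cost
    }
    const auto start = std::chrono::steady_clock::now();
    for (int i = 0; i < iterations; ++i) {
        run();
    }
    const auto stop = std::chrono::steady_clock::now();
    const std::chrono::duration<double, std::milli> elapsed = stop - start;
    return elapsed.count() / iterations;
}
```

`std::chrono::steady_clock` is used because it is monotonic, so the measurement is not affected by system clock adjustments.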

Supported C++ API Functions

| C++ API | Description |
|---|---|
|  | SiMa MLSoC device class. |
|  | Gets the GUID of the device to be opened. |
|  | Initializes a SiMa.ai MLSoC device and provides a shared pointer for the instantiated device. |
|  | Accepts the shared pointer of a device and a SiMaModel as inputs, loads the model onto the specified device, and prepares it for inference. Returns a shared pointer for the loaded model, which is subsequently used in the inference API. |
|  | Executes inference synchronously. Requires a shared pointer to the loaded model, the input and metadata, and the address of the output tensor. After inference, the output tensors are placed at the specified location. |
|  | Executes inference asynchronously. In addition to the loaded model shared pointer, input, and metadata, it requires a callback function that is triggered when inference completes. |
|  | Unloads a previously loaded model from the device. |
|  | Checks whether a particular model has been loaded on the device and is still considered loaded from the host's perspective. Becomes false only after an `unload()` call or a device reset. |
|  | Checks whether the device is already open. |
|  | Resets any queues that are associated with the device pointer. Any outstanding requests will be flushed. |
|  | Closes a previously opened device. |
|  | Resets the device entirely, completely clearing out any running services and models. |
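The descriptions above imply a fixed call order: open the device, load a model, run inference, unload the model, close the device. The sketch below shows one way a host application might guarantee the teardown half of that order with a scope guard, so that unload and close still happen if an earlier step throws. It calls no SiMa function: `ScopeGuard` and `runMockSession` are hypothetical names, and each step is replaced by a log entry so the ordering can be seen without a device.

```cpp
#include <functional>
#include <string>
#include <utility>
#include <vector>

// Hypothetical utility: runs a cleanup action when it leaves scope.
class ScopeGuard {
public:
    explicit ScopeGuard(std::function<void()> onExit) : onExit_(std::move(onExit)) {}
    ~ScopeGuard() { if (onExit_) onExit_(); }
    ScopeGuard(const ScopeGuard&) = delete;
    ScopeGuard& operator=(const ScopeGuard&) = delete;
private:
    std::function<void()> onExit_;
};

// Mock session: records step names instead of touching a device.
// A real application would call the corresponding API at each step.
std::vector<std::string> runMockSession() {
    std::vector<std::string> log;
    {
        log.push_back("open device");
        ScopeGuard closeDevice([&log] { log.push_back("close device"); });

        log.push_back("load model");
        ScopeGuard unloadModel([&log] { log.push_back("unload model"); });

        log.push_back("run inference");
    }  // guards run in reverse order: unload model, then close device
    return log;
}
```

Because local objects are destroyed in reverse order of construction, the model is always unloaded before the device is closed, matching the order in which the two were acquired.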