GStreamer Example Pipelines

To get started with GStreamer-based application development on SiMa hardware, developers need a foundational understanding of GStreamer and, in particular, the gst-launch utility. Applications are deployed on SiMa.ai hardware as pipelines built from a combination of open-source GStreamer plugins and SiMa proprietary plugins, and they are executed with the gst-launch utility.

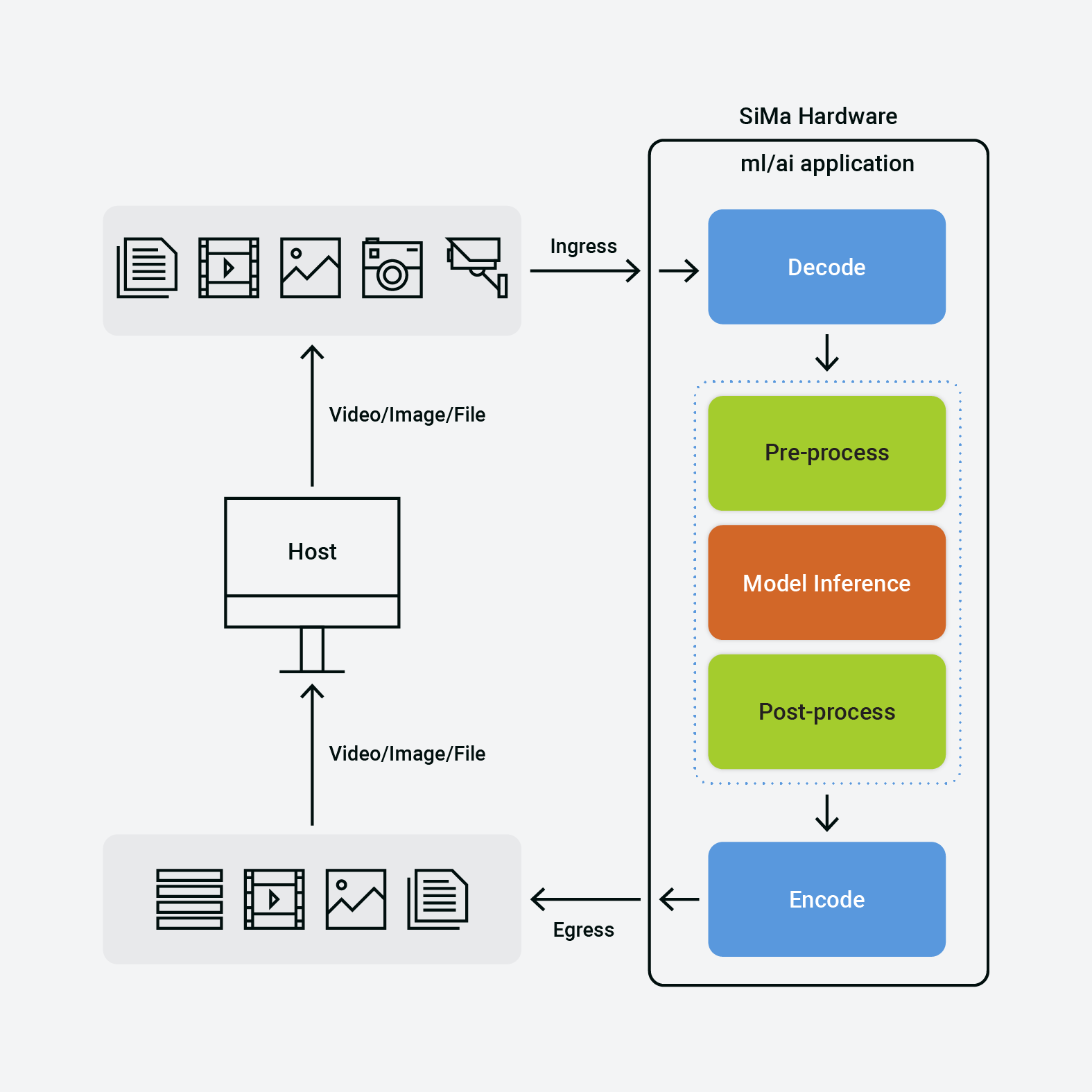

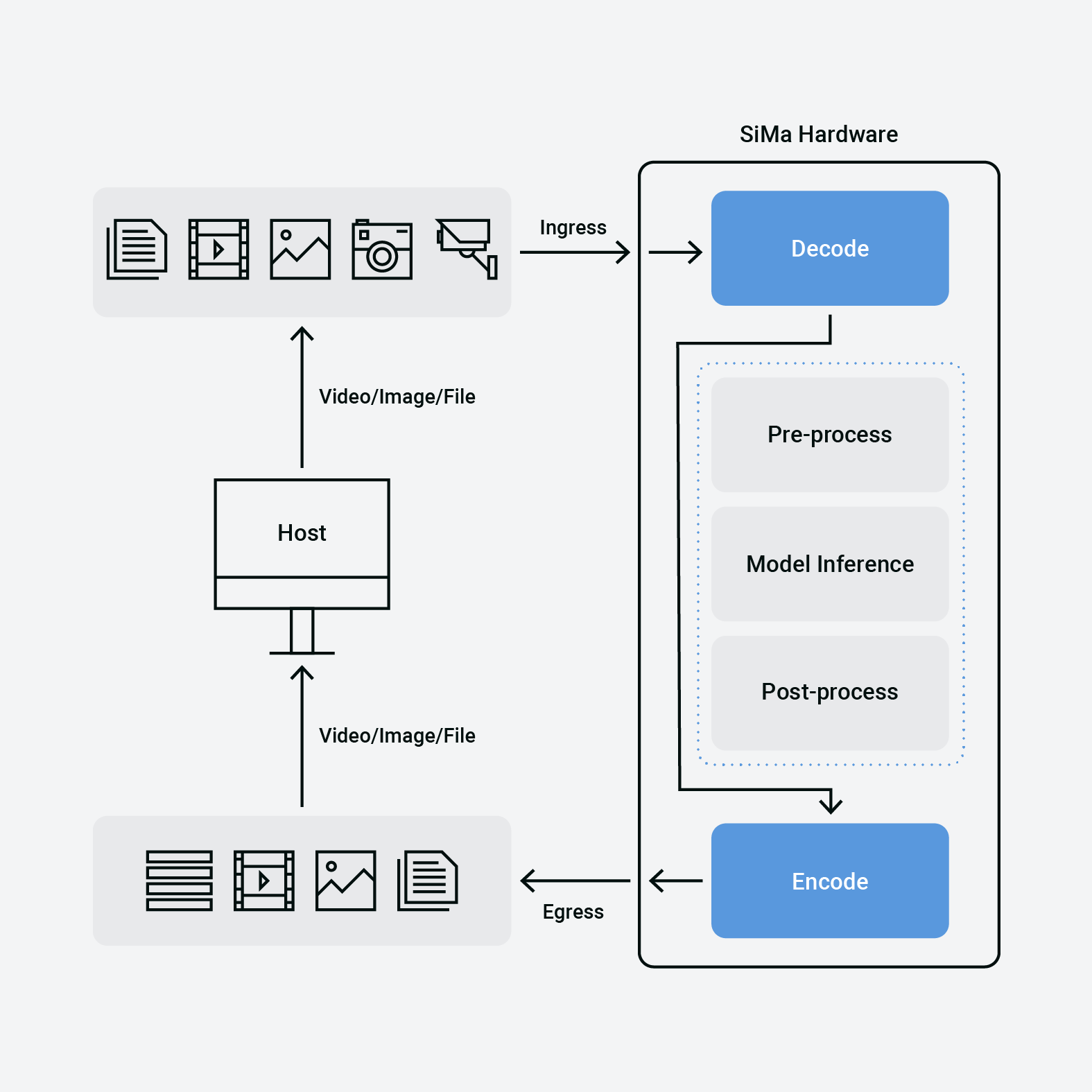

The block diagram gives a simplified view of the setup a developer works with during iterative development. The setup has two main components:

Host

The Host machine (running a SiMa.ai-supported OS) is where developers install the latest Palette SDK and then securely connect to the SiMa.ai hardware for iterative application development and, eventually, deployment to the board.

SiMa.ai Hardware

The SiMa.ai hardware refers to the boards of all form factors.

Application Overview

Ingress - all supported inputs that can be streamed to the SiMa hardware for processing

Egress - all supported output formats that can be streamed out of the SiMa hardware

Decode/Encode - multimedia decoding of incoming streams before processing and encoding of outgoing streams after it

Model Compute:

Pre-process - the compute that prepares the input data so the model can run inference

Model inference - the actual inference, which runs on the pre-processed data

Post-process - the compute performed on the inference output

Before we dive into GStreamer development with the MPK toolchain, we will run our first Hello World application based on GStreamer directly on the board. This warm-up exercise will introduce the Developer to the following:

The (ML/AI) application as a GStreamer pipeline with both open-source and SiMa plugins.

Running the GStreamer pipeline using the gst-launch utility on the SiMa hardware.

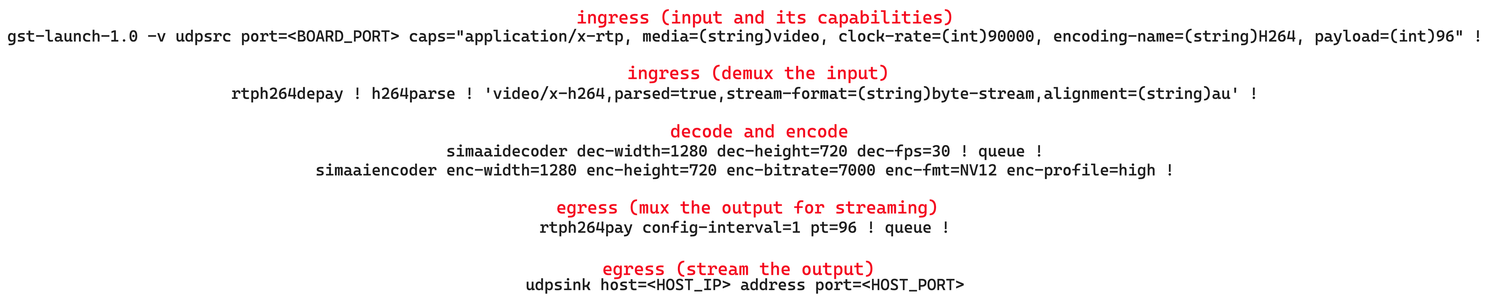

Mapping the gst-launch string to the larger building blocks of an application (Ingress, Decode, Pre-Process, Model Inference, Post-Process, Egress).

All of this will help you better understand the example GStreamer pipelines shipped as part of the Palette SDK, modify them if needed and eventually build custom pipelines to be deployed on the board.

Since the Hello World application runs directly on the board, make sure you have reviewed the instructions earlier in this documentation and can securely log in to the SiMa hardware and access its command terminal.

Running GStreamer Pipelines

Prerequisites

The following requirements must be met before you run any application pipeline.

Palette Software is already installed on your host machine by following Software Installation.

GStreamer is already installed on your host machine by following GStreamer installation.

You are already running the Palette CLI using python3 start.py and you are in the Docker terminal.

A device is connected to your host machine using an Ethernet or PCIe setup.

Hello World Application

Steps

Using the GStreamer command(s), follow the steps below.

Run on the Host machine to stream a sample test video to the SiMa hardware.

sima-user@sima-user-machine:~$ sudo gst-launch-1.0 -v videotestsrc ! videoscale ! video/x-raw,width=1280,height=720, format=NV12 ! x264enc ! rtph264pay ! queue ! udpsink host=<BOARD_IP> port=<BOARD_PORT>

Run on the board to implement the Hello World application.

sima-user@sima-user-machine:~$ sudo gst-launch-1.0 -v udpsrc port=<BOARD_PORT> ! application/x-rtp,encoding-name=H264,payload=96 ! rtph264depay ! h264parse ! 'video/x-h264,parsed=true,stream-format=(string)byte-stream,alignment=(string)au' ! simaaidecoder dec-width=1280 dec-height=720 dec-fps=30 ! queue ! simaaiencoder enc-width=1280 enc-height=720 enc-bitrate=7000 enc-fmt=NV12 enc-profile=high ! rtph264pay config-interval=1 pt=96 ! queue ! udpsink host=<HOST_IP> port=<HOST_PORT>

Run on the Host machine to receive and display the stream that the board re-encodes and sends back. The udpsrc port here must match <HOST_PORT> in the board command (6000 in this example).

sima-user@sima-user-machine:~$ GST_DEBUG=0 sudo gst-launch-1.0 udpsrc port=6000 ! application/x-rtp,encoding-name=H264,payload=96 ! rtph264depay ! 'video/x-h264,stream-format=byte-stream,alignment=au' ! avdec_h264 ! autovideosink
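The three commands only work together if their ports agree. The host sender's udpsink port must equal the board's udpsrc port (<BOARD_PORT>), and the board's udpsink port (<HOST_PORT>) must equal the host receiver's udpsrc port.

The hypothetical Python helper below is a minimal sketch that generates all three command lines from one set of values, so the ports cannot drift apart. The pipeline text is taken from the commands above, with sudo omitted. The function name and the example addresses and ports are illustrative assumptions.

```python
# Hypothetical helper: builds the three Hello World command lines from one set of
# addresses so the ports always agree. Pipeline text is copied from the commands above.

def hello_world_commands(board_ip, board_port, host_ip, host_port):
    """Return the host sender, board, and host receiver command lines as shell strings."""
    sender = (
        "gst-launch-1.0 -v videotestsrc ! videoscale ! "
        "video/x-raw,width=1280,height=720,format=NV12 ! x264enc ! rtph264pay ! queue ! "
        f"udpsink host={board_ip} port={board_port}"  # feeds the board's udpsrc
    )
    board = (
        f"gst-launch-1.0 -v udpsrc port={board_port} ! "
        "application/x-rtp,encoding-name=H264,payload=96 ! rtph264depay ! h264parse ! "
        "'video/x-h264,parsed=true,stream-format=(string)byte-stream,alignment=(string)au' ! "
        "simaaidecoder dec-width=1280 dec-height=720 dec-fps=30 ! queue ! "
        "simaaiencoder enc-width=1280 enc-height=720 enc-bitrate=7000 enc-fmt=NV12 enc-profile=high ! "
        f"rtph264pay config-interval=1 pt=96 ! queue ! udpsink host={host_ip} port={host_port}"
    )
    receiver = (
        f"gst-launch-1.0 udpsrc port={host_port} ! "  # must equal the board's udpsink port
        "application/x-rtp,encoding-name=H264,payload=96 ! rtph264depay ! "
        "'video/x-h264,stream-format=byte-stream,alignment=au' ! avdec_h264 ! autovideosink"
    )
    return {"sender": sender, "board": board, "receiver": receiver}


if __name__ == "__main__":
    # Example values only: substitute your own board/host addresses and ports.
    for role, cmd in hello_world_commands("10.42.0.241", 5000, "10.42.0.1", 6000).items():
        print(f"# {role}\n{cmd}\n")
```

The returned strings keep the single quotes around the caps, so they are meant to be pasted into a shell rather than passed to a process as an argument list.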

Description

The Application pipeline running on the board receives a sample test video pattern from the Host machine.

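The udpsrc element receives the RTP packets, rtph264depay extracts the H.264 bitstream from them, and h264parse parses it into byte-stream access units before passing it to the decoder.

The simaaidecoder element is configured with the resolution (dec-width, dec-height) and frame rate (dec-fps) of the incoming stream and decodes the H.264 bitstream into NV12 video frames.

The simaaiencoder element then encodes the decoded frames with the configured resolution, bitrate, format, and profile (enc-width, enc-height, enc-bitrate, enc-fmt, enc-profile). Finally, rtph264pay and udpsink stream the result out of the board back to the Host machine.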
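To practice mapping a gst-launch string to building blocks, you can split it on ! and label each element. The sketch below does this for the Hello World board pipeline.

The BLOCKS table is a hypothetical, context-free labelling, not an official SiMa classification. For example, it treats every h264parse as part of Decode, and it marks caps filters and queues separately from the building blocks.

```python
# Hypothetical labelling of gst-launch elements into the application building blocks.
BLOCKS = {
    "udpsrc": "Ingress", "rtspsrc": "Ingress", "v4l2src": "Ingress",
    "rtph264depay": "Ingress",
    "h264parse": "Decode", "simaaidecoder": "Decode",
    "simaaiencoder": "Encode",
    "rtph264pay": "Egress", "udpsink": "Egress",
}

def map_building_blocks(command):
    """Split a gst-launch command on '!' and label each element with a building block."""
    stages = []
    for segment in command.split("!"):  # naive: assumes no '!' inside property values
        tokens = segment.split()
        # Drop the launcher prefix (sudo, gst-launch-1.0, -v) from the first segment.
        while tokens and (tokens[0] == "sudo" or tokens[0].startswith(("gst-launch", "-"))):
            tokens.pop(0)
        if not tokens:
            continue
        name = tokens[0].strip("'").split(",")[0]  # caps: keep only the media type
        label = "Caps" if "/" in name else BLOCKS.get(name, "Other")
        stages.append((name, label))
    return stages
```

Running it on the board command from the Hello World steps lists udpsrc and rtph264depay as Ingress, simaaidecoder as Decode, simaaiencoder as Encode, and rtph264pay and udpsink as Egress.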

Next Steps

We ran a sample GStreamer-based application as a pipeline directly on the board using the gst-launch utility. The application introduced the Allegro multimedia codec hardware on the SiMa board and showed how to configure it within the gst-launch string to decode and encode multimedia data on the board.

We also broke down the command running on the board, mapped its elements to the building blocks of an application, and described what each part does.

Once you are comfortable breaking down the example gst-launch command lines and accurately mapping their elements to building blocks, you can extend the exercise by modifying the building blocks one at a time.

For example, you can try different ingress paths, such as a camera on the host machine or a prepackaged video. You can also try different egress paths, such as streaming the output to a different port or to another machine. Make sure the resolutions, formats, and color spaces match the hardware specifications to avoid codec compatibility errors, and use the GStreamer plugins that correspond to the chosen input or output path.
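One simple check for the resolution matching described above is to compare the decoder and encoder settings in the pipeline string. The hypothetical helper below reads dec-width/dec-height from simaaidecoder and enc-width/enc-height from simaaiencoder, and reports any mismatch.

It assumes, as in the Hello World pipeline, that nothing between the two elements scales the video. It does not check formats or color spaces.

```python
def element_props(command, element):
    """Return the key=value properties of the first `element` in a gst-launch string."""
    for segment in command.split("!"):  # naive split: assumes no '!' inside values
        tokens = segment.split()
        if element in tokens:
            idx = tokens.index(element)
            return dict(t.split("=", 1) for t in tokens[idx + 1:] if "=" in t)
    return {}

def resolution_mismatches(command):
    """Compare simaaidecoder dec-width/height with simaaiencoder enc-width/height."""
    dec = element_props(command, "simaaidecoder")
    enc = element_props(command, "simaaiencoder")
    problems = []
    for dim in ("width", "height"):
        d, e = dec.get(f"dec-{dim}"), enc.get(f"enc-{dim}")
        if d is not None and e is not None and d != e:
            problems.append(f"dec-{dim}={d} but enc-{dim}={e}")
    return problems
```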

Real-World Applications

The workflow for building and deploying real-world end-to-end application pipelines on the SiMa hardware using the Palette SDK involves the MPK toolchain.

Applications are deployed to the SiMa board as an MPK bundle, and the application.json file in that package contains the gst-launch command for the application being deployed. Once you complete the steps in the Quick Start Guide, you also have access to the optimized, prepackaged pipelines in the Palette SDK, which ships as a Docker container.

Next, we break down the command line of one of the prepackaged pipelines, the OpenPose application, identifying its building blocks and describing its functionality.

Running Application Pipelines with a Single Command (Beta)

Prerequisites

ffmpeg must be installed on your host system.

Your board must be reachable from the host machine over SSH.

Install the following Python libraries:

sima-user@sima-user-machine:~$ pip install paramiko
sima-user@sima-user-machine:~$ pip install tqdm

Unzip pipeline_runner.zip and place the resulting folder under your workspace folder:

sima-user@sima-user-machine:~$ cd ~/Downloads
sima-user@sima-user-machine:~/Downloads$ unzip pipeline_runner.zip
sima-user@sima-user-machine:~/Downloads$ mv pipeline_runner ~/workspace

Run the script from within the folder:

sima-user@sima-user-machine:~$ cd ~/workspace/pipeline_runner
sima-user@sima-user-machine:~/workspace/pipeline_runner$ python3 pipeline_runner.py -h
usage: pipeline_runner.py [-h] -hi HOST_IP_ADDRESS -b BOARD_IP_ADDRESS [-r RECEIVER_PORT] [-rstp RSTP_PORT] [-n {people-tracker,open-pose,yolov5,yolov7,efficientdet,detr}] [-d PIPELINE_DIR] [-f VIDEO_FILE]

Demonstration of running pipelines automatically

optional arguments:
  -h, --help            show this help message and exit
  -hi HOST_IP_ADDRESS, --host-ip-address HOST_IP_ADDRESS
                        IP Address of your host machine
  -b BOARD_IP_ADDRESS, --board-ip-address BOARD_IP_ADDRESS
                        IP Address of your SiMa board
  -r RECEIVER_PORT, --receiver-port RECEIVER_PORT
                        Port to receive the stream from the board
  -rstp RSTP_PORT, --rstp-port RSTP_PORT
                        Port to host the rstp stream
  -n {people-tracker,open-pose,yolov5,yolov7,efficientdet,detr}, --pipeline-name {people-tracker,open-pose,yolov5,yolov7,efficientdet,detr}
                        Pipeline name
  -d PIPELINE_DIR, --pipeline-dir PIPELINE_DIR
                        Path to the folder where the pipelines are contained
  -f VIDEO_FILE, --video-file VIDEO_FILE
                        Video file path
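The help output above is enough to reconstruct the script's command-line interface. The argparse sketch below is not the script's actual source. It mirrors the documented flags, including the single-dash -hi and -rstp spellings, so you can check a command line before running it against the board.

The help text does not show default values or types. All optional values therefore default to None and are kept as strings here.

```python
import argparse

# Pipeline names accepted by -n, as listed in the help output.
PIPELINES = ["people-tracker", "open-pose", "yolov5", "yolov7", "efficientdet", "detr"]

def build_parser():
    """Sketch of the documented pipeline_runner.py interface (not its real source)."""
    p = argparse.ArgumentParser(
        prog="pipeline_runner.py",
        description="Demonstration of running pipelines automatically")
    p.add_argument("-hi", "--host-ip-address", required=True, help="IP Address of your host machine")
    p.add_argument("-b", "--board-ip-address", required=True, help="IP Address of your SiMa board")
    p.add_argument("-r", "--receiver-port", help="Port to receive the stream from the board")
    p.add_argument("-rstp", "--rstp-port", help="Port to host the rstp stream")
    p.add_argument("-n", "--pipeline-name", choices=PIPELINES, help="Pipeline name")
    p.add_argument("-d", "--pipeline-dir", help="Path to the folder where the pipelines are contained")
    p.add_argument("-f", "--video-file", help="Video file path")
    return p
```

For example, build_parser().parse_args(["-hi", "10.42.0.1", "-b", "10.42.0.241", "-n", "open-pose"]) accepts the OpenPose command below. A misspelled pipeline name such as openpose is rejected.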

Running OpenPose pipeline:

sima-user@sima-user-machine:~/workspace/pipeline_runner$ python3 pipeline_runner.py -hi 10.42.0.1 -b 10.42.0.241 -n open-pose

Note

There is a known issue where the pipelines do not start the first time you launch the script. Re-run the script, and a window with the video detections should appear.

The achievable frame rate is also limited because the script's threads are constrained by Python's Global Interpreter Lock (GIL).

Additionally, the DETR pipeline is trained to detect cars, so the currently provided video shows no detections.

Manually Running Application Pipelines

In order to run the pipelines manually we will need:

One window waiting to receive the video with the predictions from the ML pipeline.

One deployed ML Pipeline running on the board.

One stream sending the video to the pipeline from the host machine or a camera feed.

These steps will have to be run in that specific order.

Receiver Window

On your host, open a terminal or tab and set up the receiver stream. In this example we use <HOST_IP>=10.42.0.1 and <HOST_RECEIVER_PORT>=9000. The port must match the <HOST_PORT> value you set in application.json later.

sima-user@sima-user-machine:~$ sudo gst-launch-1.0 udpsrc port=<HOST_RECEIVER_PORT> ! application/x-rtp,encoding-name=H264,payload=96 ! rtph264depay ! 'video/x-h264,stream-format=byte-stream,alignment=au' ! avdec_h264 ! fpsdisplaysink sync=0
[sudo] password for sima-user:
Setting pipeline to PAUSED ...
Pipeline is live and does not need PREROLL ...
Pipeline is PREROLLED ...
Setting pipeline to PLAYING ...
New clock: GstSystemClock

Pipeline Deployment

Connect to your board from the Palette CLI in the Docker container.

sima-user@docker-image-id:/usr/local/simaai/app_zoo/Gstreamer/PeopleTracker# mpk device connect -t sima@<BOARD_IP_ADDRESS>
ℹ Please enter the password for 10.42.0.241 🔐 :
🔗 Connection established to 10.42.0.241 .

Choose your pipeline:

PeopleTracker

sima-user@docker-image-id:/home# PIPELINE_PATH='/usr/local/simaai/app_zoo/Gstreamer/PeopleTracker'

OpenPose

sima-user@docker-image-id:/home# PIPELINE_PATH='/usr/local/simaai/app_zoo/Gstreamer/OpenPose'

YoloV5

sima-user@docker-image-id:/home# PIPELINE_PATH='/usr/local/simaai/app_zoo/Gstreamer/YoloV5'

YoloV7

sima-user@docker-image-id:/home# PIPELINE_PATH='/usr/local/simaai/app_zoo/Gstreamer/YoloV7'

EfficientDet

sima-user@docker-image-id:/home# PIPELINE_PATH='/usr/local/simaai/app_zoo/Gstreamer/EfficientDet'

Inside the Docker container, back up the original application.json and copy it to your workspace:

sima-user@docker-image-id:/home# cp $PIPELINE_PATH/application.json $PIPELINE_PATH/application_og.json
sima-user@docker-image-id:/home# cp $PIPELINE_PATH/application.json /home/docker/sima-cli/application.json

Give application.json the right permissions to edit it:

sima-user@docker-image-id:/home# chmod 666 /home/docker/sima-cli/application.json

From your host machine, edit the application.json file with your favorite editor. I will be using VS Code.

sima-user@sima-user-machine:~$ code ~/workspace/application.json

Choose your input option and edit application.json accordingly:

USB Camera

Replace rtspsrc location=<RTSP_SRC> with the string udpsrc port=<HOST_SENDER_PORT> ! application/x-rtp,encoding-name=H264,payload=96, choosing a port number. For example, let's use udpsrc port=5000 ! application/x-rtp,encoding-name=H264,payload=96.

Replace <HOST_PORT> with a port number; for example, let's use 9000.

Replace <HOST_IP> with the host IP address; in my case it is 10.42.0.1.

RTSP Camera Stream

Replace <RTSP_SRC> with the camera's RTSP URL; in my case it is rtsp://192.168.132.205/axis-media/media.amp.

Replace <HOST_PORT> with a port number; for example, let's use 9000.

Replace <HOST_IP> with the host IP address; in my case it is 10.42.0.1.

Video file

Replace <RTSP_SRC> with the URL of the RTSP stream that serves the video; in my case it is rtsp://10.42.0.1:8554/mystream.

Replace <HOST_PORT> with a port number; for example, let's use 9000.

Replace <HOST_IP> with the host IP address; in my case it is 10.42.0.1.

Next, create the RTSP stream for the video file. First, run an RTSP server in a Docker container:

sima-user@sima-user-machine:~$ docker run --name rtsp_server --rm -e MTX_PROTOCOLS=tcp -p 8554:8554 aler9/rtsp-simple-server

Then we will send our video stream:

sima-user@sima-user-machine:~$ ffmpeg -re -nostdin -stream_loop -1 -i <VIDEO_FILE>.mp4 -c:v copy -f rtsp rtsp://<HOST_IP_ADDRESS>:8554/mystream
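The placeholder edits above can also be scripted. The hypothetical Python helper below does four things:

- keeps a one-time backup named application_og.json, matching the backup made earlier;
- applies whole-segment swaps, such as the USB camera change from rtspsrc to udpsrc;
- replaces the <NAME> placeholders;
- parses the result with json.loads before writing it, so a broken edit is caught before mpk create.

It assumes the placeholders appear literally in the file, as in the gst strings shown in this guide, and that replacement values contain no double quotes or backslashes.

```python
import json
import shutil
from pathlib import Path

def fill_placeholders(path, values, swaps=None):
    """Back up application.json, substitute <NAME> placeholders, and re-validate the JSON."""
    path = Path(path)
    backup = path.with_name("application_og.json")
    if not backup.exists():
        shutil.copy2(path, backup)           # keep the untouched original only once
    text = path.read_text()
    for old, new in (swaps or {}).items():   # whole-segment swaps, e.g. rtspsrc -> udpsrc
        text = text.replace(old, new)
    for name, value in values.items():       # e.g. HOST_IP -> 10.42.0.1
        text = text.replace(f"<{name}>", str(value))
    json.loads(text)                         # raises ValueError if the edit broke the JSON
    path.write_text(text)
    return text
```

For the RTSP camera option, fill_placeholders("application.json", {"RTSP_SRC": "rtsp://192.168.132.205/axis-media/media.amp", "HOST_IP": "10.42.0.1", "HOST_PORT": 9000}) covers all three changes. For the USB camera option, pass swaps={"rtspsrc location=<RTSP_SRC>": "udpsrc port=5000 ! application/x-rtp,encoding-name=H264,payload=96"} as well.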

Inside the Docker container, replace the pipeline's application.json with the edited copy:

sima-user@docker-image-id:/home# cp /home/docker/sima-cli/application.json $PIPELINE_PATH/

Create the .mpk file.

sima-user@docker-image-id:/home# mpk create -s $PIPELINE_PATH -d $PIPELINE_PATH
ℹ Step COMPILE completed successfully.
ℹ Step COPY RESOURCE completed successfully
ℹ Step RPM BUILD completed successfully.
ℹ Successfully created MPK at 'Your/Chosen/Pipeline/Path/project.mpk'

Deploy the pipeline to the board.

sima-user@docker-image-id:/home# mpk deploy -f $PIPELINE_PATH/project.mpk -t <BOARD_IP_ADDRESS>
🚀 Sending MPK to 10.42.0.241...
Transfer Progress for project.mpk: 100.00%
🏁 MPK sent successfully!
✔ MPK Deployment is successful for project.mpk

Once the pipeline is deployed, if you are using an IP camera or a video file, the video should appear in the receiver window right away. If you are using a USB camera, run this command in a new terminal or tab:

sima-user@sima-user-machine:~$ sudo gst-launch-1.0 -vvv v4l2src device=/dev/video0 ! video/x-raw,width=640,height=480,framerate=30/1 ! videoscale ! videoconvert ! video/x-raw, framerate=30/1, format=NV12, width=1280, height=720 ! x264enc tune=zerolatency bitrate=500 speed-preset=superfast ! rtph264pay ! udpsink host=<BOARD_IP_ADDRESS> port=<HOST_SENDER_PORT>
Setting pipeline to PAUSED ...
Pipeline is live and does not need PREROLL ...
Pipeline is PREROLLED ...
Setting pipeline to PLAYING ...
New clock: GstSystemClock
/GstPipeline:pipeline0/GstV4l2Src:v4l2src0.GstPad:src: caps = video/x-raw, width=(int)640, height=(int)480, framerate=(fraction)30/1, format=(string)YUY2, pixel-aspect-ratio=(fraction)1/1, interlace-mode=(string)progressive, colorimetry=(string)2:4:16:1
...
Redistribute latency...
0:00:00

For this pipeline, the USB camera is /dev/video0. If your camera uses a different device node, change the path accordingly.
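If you are not sure which device node your USB camera uses, a small Python sketch like the one below lists the /dev/videoN nodes on the host in numeric order. It only looks at file names.

Some cameras expose more than one node. A tool such as v4l2-ctl --list-devices (from v4l-utils) is a more reliable way to tell which node belongs to which camera.

```python
import glob
import os
import re

def video_devices(dev_dir="/dev"):
    """List videoN device nodes in dev_dir, sorted numerically (video2 before video10)."""
    found = []
    for path in glob.glob(os.path.join(dev_dir, "video*")):
        match = re.fullmatch(r"video(\d+)", os.path.basename(path))
        if match:  # skip names that are not videoN
            found.append((int(match.group(1)), path))
    return [path for _, path in sorted(found)]

if __name__ == "__main__":
    print(video_devices() or "no /dev/video* nodes found")
```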

Running the YOLO V7 4-Camera Application Pipeline via Ethernet

Steps

Install Palette on the host machine; it runs in a Docker container.

Launch the Docker container.

Flash the MLSoC device with the test image (emmc).

Connect to your board from Palette in the Docker container.

sima-user@docker-image-id:/home# mpk device connect -t sima@<BOARD_IP_ADDRESS>
ℹ Please enter the password for 10.42.0.241 🔐 :
🔗 Connection established to 10.42.0.241 .

Go to the folder shown below, which contains the configuration file for the YOLO V7 4-Camera pipeline.

sima-user@docker-image-id:/home$ cd /usr/local/simaai/app_zoo/Gstreamer/YoloV7_4cams

Edit the application.json configuration file and change the rtspsrc sources and the udpsink host and ports. This is the command that we are looking to change:

"gst": "LD_LIBRARY_PATH='\/data\/simaai\/applications\/YoloV7_4cams\/lib' GST_DEBUG=\"0\" gst-launch-1.0 --gst-plugin-path='\/data\/simaai\/applications\/YoloV7_4cams\/lib' rtspsrc location=<RTSP_SRC_1> ! rtph264depay wait-for-keyframe=true ! h264parse ! 'video\/x-h264,parsed=true,stream-format=(string)byte-stream,alignment=(string)au' ! simaaidecoder name=simaaidecoder1 op-buff-name=simaaidecoder1 dec-width=1280 dec-height=720 dec-fmt=NV12 next-element=CVU op-buff-cached=true ! mux_1. rtspsrc location=<RTSP_SRC_2> ! rtph264depay wait-for-keyframe=true ! h264parse ! 'video\/x-h264,parsed=true,stream-format=(string)byte-stream,alignment=(string)au' ! simaaidecoder name=simaaidecoder2 op-buff-name=simaaidecoder2 dec-width=1280 dec-height=720 dec-fmt=NV12 next-element=CVU op-buff-cached=true ! mux_1. rtspsrc location=<RTSP_SRC_3> ! rtph264depay wait-for-keyframe=true ! h264parse ! 'video\/x-h264,parsed=true,stream-format=(string)byte-stream,alignment=(string)au' ! simaaidecoder name=simaaidecoder3 op-buff-name=simaaidecoder3 dec-width=1280 dec-height=720 dec-fmt=NV12 next-element=CVU op-buff-cached=true ! mux_1. rtspsrc location=<RTSP_SRC_4> ! rtph264depay wait-for-keyframe=true ! h264parse ! 'video\/x-h264,parsed=true,stream-format=(string)byte-stream,alignment=(string)au' ! simaaidecoder name=simaaidecoder4 op-buff-name=simaaidecoder4 dec-width=1280 dec-height=720 dec-fmt=NV12 next-element=CVU op-buff-cached=true ! mux_1. simaaimuxer name=mux_1 ! queue2 ! tee name=source ! queue2 ! process-cvu config=\"\/data\/simaai\/applications\/YoloV7_4cams\/etc\/yolov7_pre_proc.json\" source-node-name=\"simaaidecoder1\" buffers-list=\"simaaidecoder1,simaaidecoder2,simaaidecoder3,simaaidecoder4\" name=\"preproc\" ! process2 config=\"\/data\/simaai\/applications\/YoloV7_4cams\/etc\/yolov7_mla.json\" ! 
process-cvu config=\"\/data\/simaai\/applications\/YoloV7_4cams\/etc\/yolov7_post_proc.json\" source-node-name=\"mla_yolov7\" buffers-list=\"mla_yolov7\" name=\"postproc\" ! nmsyolov5 config=\"\/data\/simaai\/applications\/YoloV7_4cams\/etc\/yolov7_nms.json\" ! tee name=nms_out ! queue2 ! overlay_1. source. ! queue2 ! simaai-overlay2 name=overlay_1 width=1280 height=720 format=1 render-info=\"input::simaaidecoder1,bboxy::nmsyolov7\" render-rules=\"bboxy\" input-format=\"NV12\" labels-file=\"\/data\/simaai\/applications\/YoloV7_4cams\/share\/overlay\/labels.txt\" ! 'video\/x-raw,width=(int)1280,height=(int)720,format=(string)NV12,framerate=(fraction)30\/1' ! simaaifilter ! simaaiencoder enc-width=1280 enc-height=720 enc-bitrate=4000 name=simeaiencoder1 ! h264parse ! rtph264pay ! udpsink host=<UDP_HOST> port=\"<UDP_PORT_1>\" nms_out. ! queue2 ! overlay_2. source. ! queue2 ! simaai-overlay2 name=overlay_2 width=1280 height=720 format=1 render-info=\"input::simaaidecoder2,bboxy::nmsyolov7\" render-rules=\"bboxy\" input-format=\"NV12\" labels-file=\"\/data\/simaai\/applications\/YoloV7_4cams\/share\/overlay\/labels.txt\" ! 'video\/x-raw,width=(int)1280,height=(int)720,format=(string)NV12,framerate=(fraction)30\/1' ! simaaifilter ! simaaiencoder enc-width=1280 enc-height=720 enc-bitrate=4000 name=simaaiencoder2 ! h264parse ! rtph264pay ! udpsink host=<UDP_HOST> port=\"<UDP_PORT_2>\" nms_out. ! queue2 ! overlay_3. source. ! queue2 ! simaai-overlay2 name=overlay_3 width=1280 height=720 format=1 render-info=\"input::simaaidecoder3,bboxy::nmsyolov7\" render-rules=\"bboxy\" input-format=\"NV12\" labels-file=\"\/data\/simaai\/applications\/YoloV7_4cams\/share\/overlay\/labels.txt\" ! 'video\/x-raw,width=(int)1280,height=(int)720,format=(string)NV12,framerate=(fraction)30\/1' ! simaaifilter ! simaaiencoder enc-width=1280 enc-height=720 enc-bitrate=4000 name=simaaiencoder3 ! h264parse ! rtph264pay ! udpsink host=<UDP_HOST> port=\"<UDP_PORT_3>\" nms_out. ! queue2 ! overlay_4. 
source. ! queue2 ! simaai-overlay2 name=overlay_4 width=1280 height=720 format=1 render-info=\"input::simaaidecoder4,bboxy::nmsyolov7\" render-rules=\"bboxy\" input-format=\"NV12\" labels-file=\"\/data\/simaai\/applications\/YoloV7_4cams\/share\/overlay\/labels.txt\" ! 'video\/x-raw,width=(int)1280,height=(int)720,format=(string)NV12,framerate=(fraction)30\/1' ! simaaifilter ! simaaiencoder enc-width=1280 enc-height=720 enc-bitrate=4000 name=simeaiencoder4 ! h264parse ! rtph264pay ! udpsink host=<UDP_HOST> port=\"<UDP_PORT_4>\""
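The YoloV7_4cams string above uses the placeholders <RTSP_SRC_1> to <RTSP_SRC_4>, <UDP_HOST>, and <UDP_PORT_1> to <UDP_PORT_4>. The hypothetical helpers below are a sketch that builds the values for four cameras with consecutive output ports, for example 8220 to 8223.

They also refuse to return a string that still contains an unfilled <...> placeholder. Otherwise that mistake would only surface as a failure on the board.

```python
import re

def four_cam_values(rtsp_urls, udp_host, first_port):
    """Map the YoloV7_4cams placeholders to concrete values (one UDP port per camera)."""
    if len(rtsp_urls) != 4:
        raise ValueError("expected exactly 4 RTSP URLs")
    values = {"UDP_HOST": udp_host}
    for i, url in enumerate(rtsp_urls, start=1):
        values[f"RTSP_SRC_{i}"] = url
        values[f"UDP_PORT_{i}"] = str(first_port + i - 1)
    return values

def substitute(gst, values):
    """Replace <NAME> tokens and fail loudly if any placeholder is left over."""
    for name, value in values.items():
        gst = gst.replace(f"<{name}>", value)
    leftover = re.findall(r"<[A-Z0-9_]+>", gst)
    if leftover:
        raise ValueError(f"unfilled placeholders: {sorted(set(leftover))}")
    return gst
```

For the setup described next, four_cam_values(["rtsp://192.168.89.17:8554/mystream0"] * 4, host_ip, 8220) produces the four ports 8220 to 8223. Here host_ip stands for your host IP address.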

Replace the source stream placeholders <RTSP_SRC_1> to <RTSP_SRC_4> with incoming RTSP streams. All four can use the same stream, as in my case, or different streams; I will use rtsp://192.168.89.17:8554/mystream0 for all four.

For the udpsink elements that send to the host, replace <UDP_HOST> with the host IP address, in my case 10.0.42.1. Then use 8220 for <UDP_PORT_1>, 8221 for <UDP_PORT_2>, 8222 for <UDP_PORT_3>, and 8223 for <UDP_PORT_4>.

Run mpk create.

sima-user@docker-image-id:/usr/local/simaai/app_zoo/Gstreamer/YoloV7_4cams$ mpk create -s . -d .
ℹ Step a65-apps COMPILE completed successfully.
ℹ Step COMPILE completed successfully.
ℹ Step COPY RESOURCE completed successfully
ℹ Step RPM BUILD completed successfully.
ℹ Successfully created MPK at '/usr/local/simaai/app_zoo/Gstreamer/YoloV7_4cams/project.mpk'

On the host, run gst-launch in 4 terminals, one per UDP port used in the application.json file (<UDP_PORT_1> to <UDP_PORT_4>), to receive the output video streams from the application.

sima-user@sima-user-machine:/home$ GST_DEBUG=0 gst-launch-1.0 udpsrc port=<UDP_PORT_1..4> ! application/x-rtp,encoding-name=H264,payload=96 ! rtph264depay ! 'video/x-h264,stream-format=byte-stream,alignment=au' ! avdec_h264 ! fpsdisplaysink sync=0
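Instead of typing the receiver command in four terminals, you can start the four receivers from one Python script. This minimal sketch uses subprocess and passes GST_DEBUG=0 through the environment, as in the command above.

It assumes gst-launch-1.0 is on the host's PATH and that each fpsdisplaysink opens its own window. The function names are hypothetical.

```python
import os
import shlex
import subprocess

# Receiver pipeline from the step above; {port} is filled in per stream.
RECEIVER = (
    "gst-launch-1.0 udpsrc port={port} ! application/x-rtp,encoding-name=H264,payload=96 ! "
    "rtph264depay ! video/x-h264,stream-format=byte-stream,alignment=au ! "
    "avdec_h264 ! fpsdisplaysink sync=0"
)

def receiver_argv(port):
    """argv list for one receiver; no shell is involved, so the caps need no quoting."""
    return shlex.split(RECEIVER.format(port=port))

def launch_receivers(ports):
    """Start one gst-launch-1.0 receiver per port and return the Popen handles."""
    env = dict(os.environ, GST_DEBUG="0")
    return [subprocess.Popen(receiver_argv(port), env=env) for port in ports]
```

For example, procs = launch_receivers(range(8220, 8224)) starts receivers for the ports used in this section. Call wait() on each handle to keep the script alive while the windows are open, or terminate() to stop them.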

Deploy the mpk project on the connected device.

sima-user@docker-image-id:/usr/local/simaai/app_zoo/Gstreamer/YoloV7_4cams$ mpk deploy -f project.mpk -t <BOARD_IP_ADDRESS>
🚀 Sending MPK to 192.168.135.155...
Transfer Progress for project.mpk: 100.00%
🏁 MPK sent successfully!
✔ MPK Deployed! ━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━ 100% 0:00:00
✔ MPK Deployment is successful for project.mpk.

Running the YOLO V8 4-Camera Application Pipeline via Ethernet

Steps

Install the Palette CLI SDK on the host machine; it runs in a Docker container.

Launch the Docker container.

Flash the MLSoC device with the test image (emmc).

Connect to the MLSoC Developer Board from the Palette CLI SDK.

sima-user@docker-image-id:/home$ mpk device connect -t sima@<board_ip_address>
ℹ Please enter the password for <board_ip_address> 🔐 :
🔗 Connection established to <board_ip_address> .

Go to the folder shown below, which contains the configuration file for the YOLO V8 4-Camera pipeline.

sima-user@docker-image-id:/home$ cd /usr/local/simaai/app_zoo/Gstreamer/YoloV8_4cams

Edit the application.json configuration file and change the rtspsrc locations and the udpsink host IP and ports in the gst command.

The pipeline uses 4 RTSP sources as input and generates 4 independent UDP output streams. Find the RTSP URLs of the 4 IP cameras and replace <RTSP_SRC_1>, <RTSP_SRC_2>, <RTSP_SRC_3>, and <RTSP_SRC_4> in the pipeline with the respective camera URLs. For example, the RTSP URL for Hikvision cameras looks something like rtsp://<username>:<password>@<IP address of device>:<RTSP port>/Streaming/channels/<channel_number><stream number>.

Similarly, to send the output streams to a host on the same network, replace <HOST_IP> with the host IP address, and <HOST_PORT_1> to <HOST_PORT_4> with one port number per stream. This is the command to change:

"gst" : "LD_LIBRARY_PATH=\/data\/simaai\/applications\/YoloV8_4cams\/lib GST_DEBUG=3 gst-launch-1.0 --gst-plugin-path=\"\/data\/simaai\/applications\/YoloV8_4cams\/lib\" rtspsrc location=<RTSP_SRC_1> ! rtph264depay wait-for-keyframe=true ! h264parse ! 'video\/x-h264,parsed=true,stream-format=(string)byte-stream,alignment=(string)au' ! simaaidecoder name=simaaidecoder1 op-buff-name=simaaidecoder1 dec-width=1280 dec-height=720 dec-fmt=NV12 next-element=CVU op-buff-cached=true ! mux_1. rtspsrc location=<RTSP_SRC_2> ! rtph264depay wait-for-keyframe=true ! h264parse ! 'video\/x-h264,parsed=true,stream-format=(string)byte-stream,alignment=(string)au' ! simaaidecoder name=simaaidecoder2 op-buff-name=simaaidecoder2 dec-width=1280 dec-height=720 dec-fmt=NV12 next-element=CVU op-buff-cached=true ! mux_1. rtspsrc location=<RTSP_SRC_3> ! rtph264depay wait-for-keyframe=true ! h264parse ! 'video\/x-h264,parsed=true,stream-format=(string)byte-stream,alignment=(string)au' ! simaaidecoder name=simaaidecoder3 op-buff-name=simaaidecoder3 dec-width=1280 dec-height=720 dec-fmt=NV12 next-element=CVU op-buff-cached=true ! mux_1. rtspsrc location=<RTSP_SRC_4> ! rtph264depay wait-for-keyframe=true ! h264parse ! 'video\/x-h264,parsed=true,stream-format=(string)byte-stream,alignment=(string)au' ! simaaidecoder name=simaaidecoder4 op-buff-name=simaaidecoder4 dec-width=1280 dec-height=720 dec-fmt=NV12 next-element=CVU op-buff-cached=true ! mux_1. simaaimuxer name=mux_1 ! queue2 ! tee name=source ! queue2 ! 
process-cvu config=\"\/data\/simaai\/applications\/YoloV8_4cams\/etc\/preproc.json\" source-node-name=\"simaaidecoder1\" buffers-list=\"simaaidecoder1,simaaidecoder2,simaaidecoder3,simaaidecoder4\" name=\"preproc\" ! process2 config=\"\/data\/simaai\/applications\/YoloV8_4cams\/etc\/yolov8_mla.json\" ! process-cvu config=\"\/data\/simaai\/applications\/YoloV8_4cams\/etc\/postproc.json\" source-node-name=\"yolov8_MLA\" buffers-list=\"yolov8_MLA\" name=\"postproc\" ! yolov8_box_composer config=\"\/data\/simaai\/applications\/YoloV8_4cams\/etc\/box_composer.json\" name=\"box_composer\" ! tee name=nms_out ! queue2 ! overlay_1. source. ! queue2 ! simaai-overlay2 name=overlay_1 width=1280 height=720 format=1 render-info=\"input::simaaidecoder1,bbox::yolov8_box_composer\" render-rules=\"bbox\" input-format=\"NV12\" labels-file=\"\/data\/simaai\/applications\/YoloV8_4cams\/etc\/yolov8_labels\" ! 'video\/x-raw,width=(int)1280,height=(int)720,format=(string)NV12,framerate=(fraction)30\/1' ! simaaifilter ! simaaiencoder enc-width=1280 enc-height=720 enc-bitrate=4000 name=simeaiencoder1 ! h264parse ! rtph264pay ! udpsink host=<HOST_IP> port=<HOST_PORT_1> nms_out. ! queue2 ! overlay_2. source. ! queue2 ! simaai-overlay2 name=overlay_2 width=1280 height=720 format=1 render-info=\"input::simaaidecoder2,bbox::yolov8_box_composer\" render-rules=\"bbox\" input-format=\"NV12\" labels-file=\"\/data\/simaai\/applications\/YoloV8_4cams\/etc\/yolov8_labels\" ! 'video\/x-raw,width=(int)1280,height=(int)720,format=(string)NV12,framerate=(fraction)30\/1' ! simaaifilter ! simaaiencoder enc-width=1280 enc-height=720 enc-bitrate=4000 name=simaaiencoder2 ! h264parse ! rtph264pay ! udpsink host=<HOST_IP> port=<HOST_PORT_2> nms_out. ! queue2 ! overlay_3. source. ! queue2 ! 
simaai-overlay2 name=overlay_3 width=1280 height=720 format=1 render-info=\"input::simaaidecoder3,bbox::yolov8_box_composer\" render-rules=\"bbox\" input-format=\"NV12\" labels-file=\"\/data\/simaai\/applications\/YoloV8_4cams\/etc\/yolov8_labels\" ! 'video\/x-raw,width=(int)1280,height=(int)720,format=(string)NV12,framerate=(fraction)30\/1' ! simaaifilter ! simaaiencoder enc-width=1280 enc-height=720 enc-bitrate=4000 name=simaaiencoder3 ! h264parse ! rtph264pay ! udpsink host=<HOST_IP> port=<HOST_PORT_3> nms_out. ! queue2 ! overlay_4. source. ! queue2 ! simaai-overlay2 name=overlay_4 width=1280 height=720 format=1 render-info=\"input::simaaidecoder4,bbox::yolov8_box_composer\" render-rules=\"bbox\" input-format=\"NV12\" labels-file=\"\/data\/simaai\/applications\/YoloV8_4cams\/etc\/yolov8_labels\" ! 'video\/x-raw,width=(int)1280,height=(int)720,format=(string)NV12,framerate=(fraction)30\/1' ! simaaifilter ! simaaiencoder enc-width=1280 enc-height=720 enc-bitrate=4000 name=simeaiencoder4 ! h264parse ! rtph264pay ! udpsink host=<HOST_IP> port=<HOST_PORT_4>"
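Filling the Hikvision URL template by hand is error-prone when credentials contain characters such as @ or :. The sketch below composes the URL from its parts and percent-encodes the username and password.

Two details are assumptions to check against your camera's documentation:

- The default port 554 is the standard RTSP port.
- Passing channel 1 with stream "01" to produce .../channels/101 follows a common Hikvision convention.

If a camera rejects percent-encoded credentials, rtspsrc can also take them through its user-id and user-pw properties.

```python
from urllib.parse import quote

def hikvision_rtsp_url(username, password, ip, channel, stream, port=554):
    """Fill rtsp://<username>:<password>@<ip>:<port>/Streaming/channels/<channel><stream>."""
    user = quote(username, safe="")  # percent-encode characters such as '@' or ':'
    pwd = quote(password, safe="")
    return f"rtsp://{user}:{pwd}@{ip}:{port}/Streaming/channels/{channel}{stream}"
```

For four channels of one recorder, [hikvision_rtsp_url("admin", "secret", ip, ch, "01") for ch in range(1, 5)] yields candidates for <RTSP_SRC_1> to <RTSP_SRC_4>. The username, password, and ip values in this example are hypothetical.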

Run mpk create.

sima-user@docker-image-id:/usr/local/simaai/app_zoo/Gstreamer/YoloV8_4cams$ mpk create -s . -d .
ℹ Step a65-apps COMPILE completed successfully.
ℹ Step COMPILE completed successfully.
ℹ Step COPY RESOURCE completed successfully
ℹ Step RPM BUILD completed successfully.
ℹ Successfully created MPK at '/usr/local/simaai/app_zoo/Gstreamer/YoloV8_4cams/project.mpk'

On the host, run gst-launch in 4 terminals to receive the output video streams from the application. Run the command below once per stream, each time with a port number that matches one of the udpsink ports set on the board, so that each udpsrc listens on its own port.

sima-user@sima-user-machine:/home$ GST_DEBUG=0 gst-launch-1.0 udpsrc port=<HOST_PORT> ! application/x-rtp,encoding-name=H264,payload=96 ! rtph264depay ! 'video/x-h264,stream-format=byte-stream,alignment=au' ! avdec_h264 ! fpsdisplaysink sync=1

Deploy the mpk project on the connected device.

sima-user@docker-image-id:/usr/local/simaai/app_zoo/Gstreamer/YoloV8_4cams$ mpk deploy -f project.mpk -t <board_ip_address>
🚀 Sending MPK to <board_ip_address>...
Transfer Progress for project.mpk: 100.00%
🏁 MPK sent successfully!
✔ MPK Deployed! ━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━ 100% 0:00:00
✔ MPK Deployment is successful for project.mpk.

Generic Aggregator Plugin

The Generic Aggregator is a plugin template, not a complete plugin. Use this template as a starting point for creating custom plugins for GStreamer application pipelines.

Prerequisites

The following requirements must be met before you run any application pipeline.

The Palette software is installed on your host machine by following Software Installation.

GStreamer is installed on your host machine by following GStreamer installation.

The Palette CLI is running on your host machine using python3 start.py and you are in the Docker terminal.

A device is connected to your host machine either over Ethernet or PCIe.

Steps

Launch the Docker container.

Flash the MLSoC device with the test image (emmc).

Connect to the MLSoC Developer Board from the Palette CLI SDK.

sima-user@docker-image-id:/home$ mpk device connect -t sima@<BOARD_IP_ADDRESS>
🔗 Connection established to 192.168.135.218 .

Go to the Generic Aggregator Demo folder shown below.

sima-user@docker-image-id:/home$ cd /usr/local/simaai/app_zoo/Gstreamer/GenericAggregatorDemo

Edit the JSON file plugins/simple_overlay/cfg/overlay.json and change "dump_data": 0 to "dump_data": 1.

{
    "version": 0.1,
    "node_name": "overlay",
    "memory": { "cpu": 0, "next_cpu": 0 },
    "system": { "out_buf_queue": 1, "debug": 0, "dump_data": 0 },
    "buffers": {
        "input": [
            { "name": "image", "size": 894999 },
            { "name": "boxes", "size": 32 }
        ],
        "output": { "size": 211527 }
    }
}
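If you prefer not to edit overlay.json by hand, the change is a single key under "system". This minimal sketch loads the file with Python's json module, sets system.dump_data, and writes the file back.

The file is rewritten with the sketch's own indentation. That changes the formatting but not the content. The function name is hypothetical.

```python
import json
from pathlib import Path

def set_dump_data(cfg_path, enabled=True):
    """Set system.dump_data in the overlay plugin config (1 = dump frames, 0 = off)."""
    path = Path(cfg_path)
    cfg = json.loads(path.read_text())
    cfg["system"]["dump_data"] = 1 if enabled else 0
    path.write_text(json.dumps(cfg, indent=4) + "\n")
    return cfg
```

For this demo, run set_dump_data("plugins/simple_overlay/cfg/overlay.json") from the GenericAggregatorDemo folder before running mpk create.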

Run the mpk create command.

sima-user@docker-image-id:/usr/local/simaai/app_zoo/Gstreamer/GenericAggregatorDemo$ mpk create -s . -d .
ℹ Step a65-apps COMPILE completed successfully.
ℹ Step COMPILE completed successfully.
ℹ Step COPY RESOURCE completed successfully
ℹ Step RPM BUILD completed successfully.
✔ Successfully created MPK at '/usr/local/simaai/app_zoo/Gstreamer/GenericAggregatorDemo/project.mpk'

Deploy the mpk on the connected device.

sima-user@docker-image-id:/usr/local/simaai/app_zoo/Gstreamer/GenericAggregatorDemo$ mpk deploy -f project.mpk
ℹ killing older mpk with the same name deployed on 192.168.135.218
✔ MPK with id: efficientdet_Pipeline is successfully killed on 192.168.135.218 💀
🚀 Sending MPK to 192.168.135.218...
Transfer Progress for project.mpk: 100.00%
🏁 MPK sent successfully!
✔ MPK Deployed! ━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━ 100% 0:00:00
✔ MPK Deployment is successful for project.mpk.

The "dump_data": 1 flag dumps the output images under /tmp/ with the name overlay-<frameID>.out.

Pull any frame from the device and view it in any image viewer application on the host machine.
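To pull a frame, you can use scp from the host: scp sima@<BOARD_IP_ADDRESS>:/tmp/overlay-<frameID>.out ./ copies one frame to the current directory.

If you prefer to script it, the sketch below uses paramiko (installed earlier for the pipeline runner) to list /tmp on the board over SFTP and copy the dump with the highest frame ID. It assumes:

- the board user is sima, as in the mpk device connect commands;
- password authentication;
- numeric frame IDs.

AutoAddPolicy accepts unknown host keys, which is acceptable only on a trusted development network. The function names are hypothetical.

```python
import os
import re

FRAME_NAME = re.compile(r"overlay-(\d+)\.out")  # assumes a numeric <frameID>

def overlay_frames(names):
    """Keep overlay-<frameID>.out names and sort them by numeric frame ID."""
    frames = []
    for name in names:
        match = FRAME_NAME.fullmatch(name)
        if match:
            frames.append((int(match.group(1)), name))
    return [name for _, name in sorted(frames)]

def fetch_latest_frame(board_ip, password, local_dir=".", username="sima", remote_dir="/tmp"):
    """Copy the overlay dump with the highest frame ID over SFTP; return its local path or None."""
    import paramiko  # installed earlier with: pip install paramiko
    client = paramiko.SSHClient()
    client.set_missing_host_key_policy(paramiko.AutoAddPolicy())  # dev-board convenience only
    client.connect(board_ip, username=username, password=password)
    try:
        sftp = client.open_sftp()
        try:
            frames = overlay_frames(sftp.listdir(remote_dir))
            if not frames:
                return None
            local_path = os.path.join(local_dir, frames[-1])
            sftp.get(f"{remote_dir}/{frames[-1]}", local_path)
            return local_path
        finally:
            sftp.close()
    finally:
        client.close()
```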

In the output image, you should see a pair of bounding boxes drawn on the image, as shown below.

Note

This is a demo application, so the bounding box locations have no meaning; the boxes are drawn at a static location.

Conclusion

We started with an overview of the application pipeline and the developer setup. We then briefly covered the Palette SDK, the associated MPK toolchain, and the two possible development paths.

Within the GStreamer development flow, we first ran a simple GStreamer-based pipeline directly on the board while examining the individual components of the command line and the associated plugins. We then repeated the exercise with real-world applications prepackaged in the SDK and showed how they can be created, deployed, and modified when needed.

Modifying the ingress and egress paths of an application pipeline is a valuable skill. Once complex real-world GStreamer pipelines are included in SiMa's Developer Journey document, this skill makes it easier to break them down into simpler building blocks and then modify and deploy them.

Building custom pipelines from scratch requires the ability to re-configure/update individual GStreamer plugins which will be covered in a future version of this document.