Model Compatibility Guide

This guide helps developers identify which parts of an ONNX model to edit with the graph surgery API, and explains why an ONNX model may not be supported, or not accelerated, by the Model SDK. It applies to models that use ONNX operator set (opset) versions 16 and 17.

This guide gives a conservative description of the conditions under which individual operations are supported and accelerated. The Model SDK performs automatic optimizations that may convert apparently unsupported operations into a supported or accelerated form. As a result, the operations in an ONNX model often do not correspond one-to-one with the operations compiled for the MLSoC. Nevertheless, for graph surgery it is useful to understand support in terms of the ONNX operators involved.

Terminology

| Term | Definition |
|---|---|
| Operator | An ONNX operator included in opset 16 or 17, or a TFLite operator from TFLite version 2.10.0 |
| Operation | A concrete instance of an operator in a model, with specific attribute values and input and output types and shapes |
| Model | An inference model consisting exclusively of operators from ONNX opset 16 or 17, or of operators pre-quantized with TFLite 2.10.0 |
| ONNX | Any mention of ONNX refers to ONNX version 1.11 or 1.12, which correspond to ONNX opset 16 and 17, respectively. Testing was performed with ONNX Runtime version 1.15.0 |
| TFLite | Any mention of TFLite refers to TFLite version 2.10.0 or to a TFLite operator |

Note

An operator and an operation can be thought of as an ‘abstract operator’ and a ‘concrete operator’, respectively.

Supported Operations

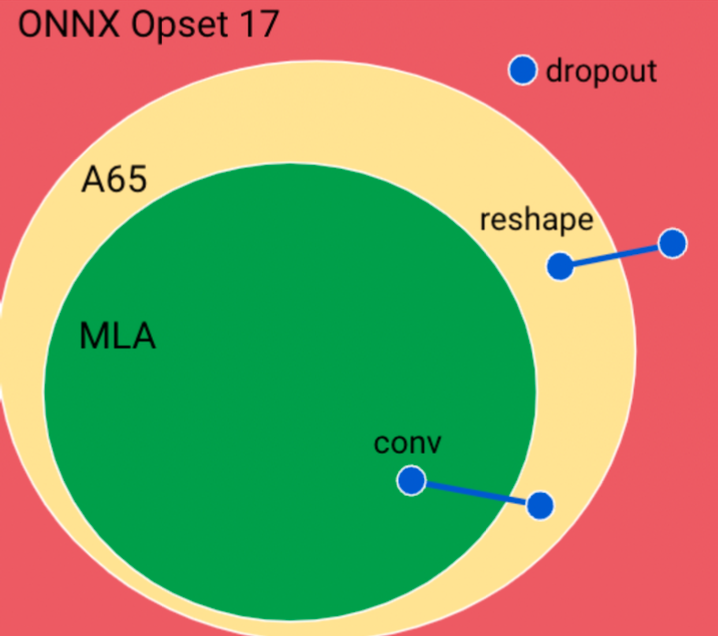

An operation is called supported when the Model SDK can compile it. Otherwise it is called unsupported. A supported operation is called accelerated when it can be compiled to run on the MLA. Each operation therefore falls into one of three categories: (1) unsupported, (2) supported but not accelerated, and (3) supported and accelerated.

Whether an operation is supported or accelerated may depend on its operator, its attributes, the types and shapes of its inputs and outputs, whether some inputs are constants, and the values of those constant inputs. The following sections break down the requirements as follows:

General restrictions on which operators are supported (Unsupported Operators)

General requirements on types and shapes for operations to be supported (A65 Supported Operators)

General requirements for operations to be accelerated (MLA Supported Operators)

Requirements on attributes, types, shapes, and constants for individual operators (Individual Operators)

Custom Implementation

If an operator falls into the unsupported category, it can still be implemented with custom code on the A65 or EV74. This guide covers only automatic compilation, in which every operator falls into one of the three categories described above.

Note

Any operation which is supported by the MLA is also supported by the A65.

Virtually all operators in categories 2 and 3 above also have instantiations that are not supported. This is true even for relatively simple operators. For example, depending on the tensor element type, an Add operation is either accelerated on the MLA, supported only on the A65, or not supported at all.
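The following minimal sketch shows how one operator can land in every category. It classifies a single Add instantiation using only the general rules stated in this guide: the unsupported element types listed under A65 Supported Operators, and the float32, 4D/5D, no-broadcasting, and scalar-constant rules listed under MLA Supported Operators. The function name, the `Category` enum, and the string type names are hypothetical and are not part of the Model SDK. The MLA requirements are necessary but not sufficient, so the sketch can only report that an operation *may* be accelerated.

```python
from enum import Enum

# Element types listed as unsupported in the A65 Supported Operators section.
UNSUPPORTED_TYPES = {"string", "complex64", "complex128"}


class Category(Enum):
    UNSUPPORTED = 1
    SUPPORTED_NOT_ACCELERATED = 2
    MAYBE_ACCELERATED = 3  # necessary MLA conditions hold; not a guarantee


def classify_add(elem_type, shape_a, shape_b, a_is_const=False, b_is_const=False):
    """Hypothetical helper: classify one Add instantiation by type and shape."""
    if elem_type in UNSUPPORTED_TYPES:
        return Category.UNSUPPORTED
    if elem_type != "float32":
        # Supported element type, but the MLA requires 32-bit floats.
        return Category.SUPPORTED_NOT_ACCELERATED
    a_scalar = a_is_const and len(shape_a) == 0
    b_scalar = b_is_const and len(shape_b) == 0
    a_ok = len(shape_a) in (4, 5)
    b_ok = len(shape_b) in (4, 5)
    # Both inputs 4D/5D with identical shapes (no numpy-style broadcasting).
    if a_ok and b_ok and tuple(shape_a) == tuple(shape_b):
        return Category.MAYBE_ACCELERATED
    # One 4D/5D input plus a scalar constant (the Add exclusion for the MLA).
    if (a_ok and b_scalar) or (b_ok and a_scalar):
        return Category.MAYBE_ACCELERATED
    return Category.SUPPORTED_NOT_ACCELERATED
```

For example, `classify_add("int64", (1, 3, 8, 8), (1, 3, 8, 8))` lands in the supported but not accelerated category, while the same shapes with `"float32"` pass the necessary MLA checks.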

Unsupported Operators

ONNX opset 17 (a superset of opset 16) contains a total of 200 operators, of which 72 are unsupported. This section describes the specific criteria used to identify the unsupported operators. We use the ONNX operator schemas to programmatically extract the vast majority of the information needed to determine the support level of each operator.

An ONNX operator is not supported if one or more of the following criteria are met:

The operator is deprecated, that is, `schema.deprecated` is `True` for the operator schema.

The operator is experimental, that is, `schema.support_level` is not equal to `onnx.defs.OpSchema.SupportType.COMMON`.

The operator belongs to the `ai.onnx.preview.training` or the `ai.onnx.ml` domain, as defined by `schema.domain`. These two domains contain operators specific to training and to tree-based machine learning, respectively, and are therefore not relevant.

The operator applies to training only (and is not in the `ai.onnx.preview.training` domain).

The operator is specific to RNNs, which do not apply to computer vision.

The operator defines control flow.

The operator has a non-optional input (i.e., one declared as Single or Variadic) that only accepts sequences, maps, or tensor element types that are not supported. See the A65 Supported Operators section.

The operator is not supported by TVM, which we use internally. This criterion excludes only four rarely used operators.

There are no restrictions on attribute values: we support all attribute values supported by ONNX. In other words, the element type restrictions mentioned earlier do not apply to attributes.
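The criteria above can be expressed as a single predicate. The following minimal sketch does that. `SchemaInfo` is a hypothetical stand-in that mirrors a few schema fields. In the `onnx` Python package these roughly correspond to `OpSchema.deprecated`, `OpSchema.support_level`, `OpSchema.domain`, and the allowed `types` of each entry in `OpSchema.inputs`. The control-flow and RNN operator sets are the standard ONNX ones. The training-only and TVM exclusion lists are not given in this guide, so the caller passes them in.

```python
from dataclasses import dataclass, field

# Domains excluded by the criteria above.
EXCLUDED_DOMAINS = {"ai.onnx.preview.training", "ai.onnx.ml"}
# Standard ONNX control-flow and RNN operators.
CONTROL_FLOW_OPS = {"If", "Loop", "Scan"}
RNN_OPS = {"RNN", "GRU", "LSTM"}
# Tensor element types the A65 does not support, as ONNX type strings.
UNSUPPORTED_TENSOR_TYPES = {"tensor(string)", "tensor(complex64)", "tensor(complex128)"}


@dataclass
class SchemaInfo:
    """Hypothetical stand-in mirroring a few onnx.defs.OpSchema fields."""
    name: str
    domain: str = ""             # "" is the default ai.onnx domain
    deprecated: bool = False
    experimental: bool = False   # i.e. support_level != SupportType.COMMON
    # One entry per non-optional input: the set of type strings it accepts.
    required_input_types: list = field(default_factory=list)


def input_is_unusable(allowed_types):
    """True if every allowed type is a sequence, a map, or an unsupported tensor type."""
    return bool(allowed_types) and all(
        t.startswith(("seq(", "map(")) or t in UNSUPPORTED_TENSOR_TYPES
        for t in allowed_types
    )


def is_unsupported(schema, training_only=frozenset(), tvm_unsupported=frozenset()):
    """Apply the exclusion criteria; the last two sets are caller-supplied."""
    return (
        schema.deprecated
        or schema.experimental
        or schema.domain in EXCLUDED_DOMAINS
        or schema.name in training_only
        or schema.name in RNN_OPS
        or schema.name in CONTROL_FLOW_OPS
        or any(input_is_unusable(ts) for ts in schema.required_input_types)
        or schema.name in tvm_unsupported
    )
```

Attributes are deliberately absent from `SchemaInfo`, because attribute values never make an operator unsupported.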

A65 Supported Operators

A model is supported by the A65 when it meets the requirements below. The element type of each tensor must be static; it cannot change at runtime.

Of the 16 tensor element types, 12 are supported. The unsupported types are:

TensorProto.STRING

TensorProto.COMPLEX64

TensorProto.COMPLEX128

Operator attribute values must be static and known at compile time. That is, an attribute cannot be changed at runtime.

Some operators are inherently dynamic, in the sense that input tensor values affect model properties such as output tensor shapes and element types. For example, for the Reshape operator, the values of the `shape` input tensor define the shape of the reshaped output tensor.

The A65 provides limited support for dynamic operators, subject to the above constraints.
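The sketch below shows what makes Reshape dynamic, and how to tell a static instantiation from a dynamic one. `reshape_output_shape` follows the ONNX Reshape rules with the default `allowzero=0`: `0` copies the input dimension and `-1` is inferred. The same data tensor therefore produces different output shapes for different `shape` values. `static_reshapes` uses a hypothetical dict-based graph. In a real `onnx` model, `graph.initializer` and the `Constant` nodes in `graph.node` would play these roles. The check is conservative, because the Model SDK's automatic optimizations may fold some computed shapes into constants.

```python
from math import prod


def reshape_output_shape(input_shape, shape_values):
    """Sketch of ONNX Reshape shape inference (allowzero=0 semantics)."""
    # 0 copies the corresponding input dimension; -1 is inferred below.
    out = [input_shape[i] if d == 0 else d for i, d in enumerate(shape_values)]
    if out.count(-1) > 1:
        raise ValueError("at most one dimension may be -1")
    if -1 in out:
        known = prod(d for d in out if d != -1)
        if known == 0:
            raise ValueError("cannot infer -1 when another dimension is 0")
        out[out.index(-1)] = prod(input_shape) // known
    if prod(out) != prod(input_shape):
        raise ValueError("element count mismatch")
    return out


def static_reshapes(graph):
    """Map each Reshape node name to True if its 'shape' input is a constant."""
    constants = set(graph["initializers"])
    for node in graph["nodes"]:
        if node["op_type"] == "Constant":
            constants.update(node["outputs"])
    return {
        node["name"]: node["inputs"][1] in constants  # inputs are (data, shape)
        for node in graph["nodes"]
        if node["op_type"] == "Reshape"
    }
```

A Reshape whose `shape` comes from an initializer is static and meets the MLA's static-instantiation requirement. A Reshape fed by a runtime `Shape` node is dynamic.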

All tensors in a model—except for constants—must share the same batch size. In other words, their first dimensions must be the same, and updating this batch size consistently across all tensors must yield a valid model.
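The batch-size rule can be checked mechanically. The following minimal sketch covers only the first half of the rule: all non-constant tensors must share the same first dimension. It cannot confirm that changing the batch size still yields a valid model. The function name and the plain `{name: shape}` mapping with integer dimensions are assumptions for illustration.

```python
def common_batch_size(tensor_shapes, constant_names=frozenset()):
    """Return the batch size shared by all non-constant tensors.

    tensor_shapes maps tensor names to integer shape tuples. Raises ValueError
    if two non-constant tensors disagree on their first dimension.
    """
    batch_sizes = {
        shape[0]
        for name, shape in tensor_shapes.items()
        if name not in constant_names and len(shape) > 0  # skip constants and scalars
    }
    if len(batch_sizes) > 1:
        raise ValueError(f"inconsistent batch sizes: {sorted(batch_sizes)}")
    return next(iter(batch_sizes), None)
```

Constants such as convolution weights are excluded, because their first dimension is usually an output-channel count rather than a batch size.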

MLA Supported Operators

To be supported by the MLA, an operation must satisfy the following requirements. These requirements are necessary but not sufficient:

The operation must be supported by the A65. In other words, all A65 support requirements also apply to the MLA supported operators.

All properties of the operation must be static and known at compile time. However, a dynamic operator such as Reshape is still supported on the MLA in the special case where the operator instantiation is static. For example, Reshape is supported if its `shape` input tensor is a constant.

All input tensors must be 4D or 5D, except for the ones explicitly listed in the table below. As a consequence, numpy-style broadcasting, as described in broadcasting in ONNX, is not supported.

All tensors of ONNX models must have the element type `TensorProto.FLOAT` (32-bit floating point).

The table below shows the operators and the input tensors excluded from the 4D requirement.

| Operator | Input Tensors Excluded from the 4D Requirement |
|---|---|
| Add, Sub | Either A or B can be a scalar constant |
| Conv | B |
| ConvTranspose | B |
| Mul | Either A or B can be a scalar constant |
| Pad | Pads, axes |
| Resize | Scales, sizes |
| Slice | Tind inputs (starts, ends, axes, steps) |
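The rank requirement and its exclusions can be checked per operation. The following sketch does this using the ONNX input names from the operator specifications. It assumes that the Slice `Tind` entry covers the `starts`, `ends`, `axes`, and `steps` inputs. The function and table names are hypothetical. As with the other MLA requirements, passing this check is necessary but not sufficient.

```python
# Inputs exempt from the 4D/5D requirement, per the table above.
RANK_EXEMPT_INPUTS = {
    "Conv": {"B"},
    "ConvTranspose": {"B"},
    "Pad": {"pads", "axes"},
    "Resize": {"scales", "sizes"},
    "Slice": {"starts", "ends", "axes", "steps"},  # the Tind inputs
}
# For these operators, either A or B may be a scalar constant.
SCALAR_CONST_OPS = {"Add", "Sub", "Mul"}


def rank_violations(op_type, inputs):
    """inputs: list of (name, rank, is_const). Return names breaking the 4D/5D rule."""
    exempt = RANK_EXEMPT_INPUTS.get(op_type, set())
    bad = []
    for name, rank, is_const in inputs:
        if rank in (4, 5) or name in exempt:
            continue
        if op_type in SCALAR_CONST_OPS and is_const and rank == 0:
            continue
        bad.append(name)
    return bad
```

A Conv with a 1D bias passes. An Add with a 1D constant operand is flagged, because only scalar constants are exempt and broadcasting is not supported.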

The MLA supports the NHWC layout format only. However, this is not a user-facing limitation since layout transforms are automatically inserted as needed.
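ONNX convolution operators conventionally use the NCHW layout. The following sketch shows only the shape effect of the layout transforms mentioned above, expressed as ONNX Transpose permutations. It makes no claim about how or where the Model SDK actually inserts them, and the names are illustrative.

```python
# Transpose permutations between the usual ONNX layout (NCHW) and NHWC.
NCHW_TO_NHWC = (0, 2, 3, 1)
NHWC_TO_NCHW = (0, 3, 1, 2)


def permute(shape, perm):
    """Apply a Transpose-style permutation (output dim i = input dim perm[i])."""
    return tuple(shape[p] for p in perm)


nhwc = permute((1, 3, 224, 224), NCHW_TO_NHWC)  # (1, 224, 224, 3)
nchw = permute(nhwc, NHWC_TO_NCHW)              # back to (1, 3, 224, 224)
```

The two permutations are inverses, so a transform into NHWC and a transform back to NCHW leave the original shape unchanged.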

Individual Operators

A single ONNX operator maps to one or more kernels, as described in the MLA Supported Operators section above. This page lists all operators that are fully supported on the MLA, that is, operators that map to a kernel graph in which every kernel executes on the MLA.

For some operators, MLA support requires additional constraints, which are specified in the table below using pseudo-Python syntax. There is one requirement per line, and all requirements must be met; that is, they are combined with a logical “and.”
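Because each line is an independent requirement and all of them must hold, checking an operation reduces to a logical "and" over the lines. The following sketch pairs each requirement's text with a predicate and reports which lines fail. The two example requirements are hypothetical. They are **not** taken from the table and only illustrate the combination rule.

```python
def meets_requirements(op, requirements):
    """Return (ok, failed): ok only if every per-line requirement holds (logical and)."""
    failed = [text for text, check in requirements if not check(op)]
    return not failed, failed


# Hypothetical requirements in the table's one-per-line style; these are NOT
# from the table and only illustrate how the lines are combined.
example_requirements = [
    ("len(kernel_shape) == 2", lambda op: len(op["kernel_shape"]) == 2),
    ("group == 1", lambda op: op["group"] == 1),
]

ok, failed = meets_requirements({"kernel_shape": [3, 3], "group": 2}, example_requirements)
# ok is False and failed == ["group == 1"]
```

Returning the failing lines, rather than a bare boolean, points directly at the attribute to change during graph surgery.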