Application Pipeline

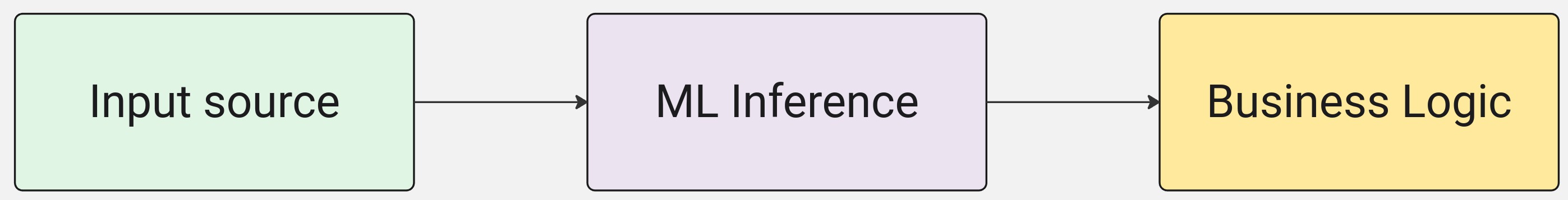

We will start by breaking down the key components of a Machine Learning (ML) application and defining the tasks used throughout this guide. Each stage of the application maps to a different hardware functional block within the MLSoC, and setting up the app takes multiple steps. Because a solid understanding of the architecture is essential for efficient development, let us begin by outlining a basic pipeline architecture.

A Typical ML Application

If we were to look at a very simple classification ML application, it would look something like this:

Input Source: Any input into the application, such as an RTSP stream, a video file, image files, or an audio stream.

ML Inference: Running the ML model to classify the input in some way.

Business Logic: Logic that acts on the ML inference result, such as saving or displaying information, PLC communication, or network storage.

ML Preprocessing and Postprocessing

In reality, ML models require very specific preprocessing and postprocessing steps. If we break this generic case down a bit more, we get the following:

ML Preprocess: The specific preprocessing required by the ML model being deployed (resizing, normalization, etc.).

ML Inference: The actual neural network model inference.

ML Postprocess: The postprocessing required for the output of the ML Inference step (softmax, argmax, box-decoding, etc.).
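To make the three-stage breakdown concrete, here is a minimal sketch of how the stages chain together. Everything in it is hypothetical and for illustration only: `Tensor`, `MlStages`, and `make_toy_stages` are not SiMa.ai APIs, and the "model" is a stand-in that scores an image as dark or bright from its mean pixel value.

```cpp
#include <cassert>
#include <cstddef>
#include <functional>
#include <numeric>
#include <vector>

// Hypothetical names for illustration; none of these come from a SiMa.ai API.
using Tensor = std::vector<float>;

struct MlStages {
    std::function<Tensor(const Tensor&)> preprocess;        // model-specific input prep
    std::function<Tensor(const Tensor&)> infer;             // neural network inference
    std::function<std::size_t(const Tensor&)> postprocess;  // decode raw outputs

    // Chain the three stages: preprocess -> infer -> postprocess.
    std::size_t run(const Tensor& input) const {
        assert(preprocess && infer && postprocess);
        return postprocess(infer(preprocess(input)));
    }
};

// Toy "dark vs. bright" classifier used only to exercise the chain.
MlStages make_toy_stages() {
    MlStages s;
    // Preprocess: scale 8-bit pixel values into [0, 1].
    s.preprocess = [](const Tensor& pixels) {
        Tensor out;
        out.reserve(pixels.size());
        for (float p : pixels) out.push_back(p / 255.0f);
        return out;
    };
    // "Inference": two scores, {darkness, brightness}, from the mean pixel value.
    s.infer = [](const Tensor& x) {
        const float mean = x.empty() ? 0.0f
            : std::accumulate(x.begin(), x.end(), 0.0f) / static_cast<float>(x.size());
        return Tensor{1.0f - mean, mean};
    };
    // Postprocess: class 1 (bright) if its score is higher, else class 0 (dark).
    s.postprocess = [](const Tensor& scores) -> std::size_t {
        return scores[1] > scores[0] ? 1 : 0;
    };
    return s;
}
```

Calling `make_toy_stages().run(pixels)` returns a class index that the business logic can then act on. Keeping each stage behind its own callable mirrors the idea that each stage can be handled by a different processing block.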

ResNet50 Classification Application

What is ResNet50?

ResNet-50 is a deep convolutional neural network renowned for its 50-layer architecture and the introduction of residual learning through skip connections. This design addresses challenges like vanishing gradients, enabling the training of significantly deeper networks. Since its introduction, ResNet-50 has become a foundational model in computer vision, excelling in tasks such as image classification, object detection, and segmentation. Its architecture has also influenced various deep learning frameworks and applications.

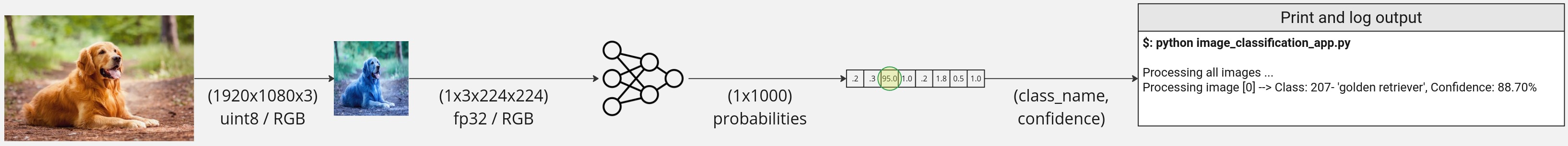

Let’s take ResNet50 as an example to showcase the workflow. This simple classification application will take an image as input and output the detected object type along with a confidence score.

The application pipeline steps will be as follows:

Image(s) Source: Will read all images from a local directory.

ResNet50 Preprocessing: Will resize the image to 224x224 (height x width), then scale and normalize the image values to be between 0.0 and 1.0.

ResNet50 Inference: Will run the resized and scaled image through ResNet50 neural network inference.

ResNet50 Postprocessing: Will perform argmax on all the output values and pick the highest confidence category.

Business Logic: Will print the category and the confidence score for each result received.
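The preprocessing and postprocessing steps above can be sketched on the host in plain C++. This is an illustrative sketch, not the code that runs on the MLSoC: the function names are hypothetical, nearest-neighbour interpolation is an assumption (the guide does not say which resize method is used), and the image is assumed to be 8-bit with interleaved channels.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <utility>
#include <vector>

// Nearest-neighbour resize of an interleaved (HWC) 8-bit image.
// The interpolation method is an assumption; the guide does not specify one.
std::vector<std::uint8_t> resize_nearest(const std::vector<std::uint8_t>& src,
                                         int src_h, int src_w, int channels,
                                         int dst_h, int dst_w) {
    assert(src_h > 0 && src_w > 0 && channels > 0 && dst_h > 0 && dst_w > 0);
    assert(src.size() == static_cast<std::size_t>(src_h) * src_w * channels);
    std::vector<std::uint8_t> dst(static_cast<std::size_t>(dst_h) * dst_w * channels);
    for (int y = 0; y < dst_h; ++y) {
        const int sy = y * src_h / dst_h;  // source row for this output row
        for (int x = 0; x < dst_w; ++x) {
            const int sx = x * src_w / dst_w;  // source column for this output column
            for (int c = 0; c < channels; ++c) {
                dst[(static_cast<std::size_t>(y) * dst_w + x) * channels + c] =
                    src[(static_cast<std::size_t>(sy) * src_w + sx) * channels + c];
            }
        }
    }
    return dst;
}

// Scale 8-bit pixel values into the 0.0 to 1.0 range.
std::vector<float> scale_to_unit(const std::vector<std::uint8_t>& pixels) {
    std::vector<float> out(pixels.size());
    for (std::size_t i = 0; i < pixels.size(); ++i) out[i] = pixels[i] / 255.0f;
    return out;
}

// Argmax: index of the highest score, paired with that score.
std::pair<std::size_t, float> top1(const std::vector<float>& scores) {
    assert(!scores.empty());
    std::size_t best = 0;
    for (std::size_t i = 1; i < scores.size(); ++i)
        if (scores[i] > scores[best]) best = i;
    return {best, scores[best]};
}
```

In this sketch, a source image would go through `resize_nearest(img, h, w, 3, 224, 224)` and then `scale_to_unit` before inference. `top1` would be applied to the model's output scores, and the business logic would print the returned index and score.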

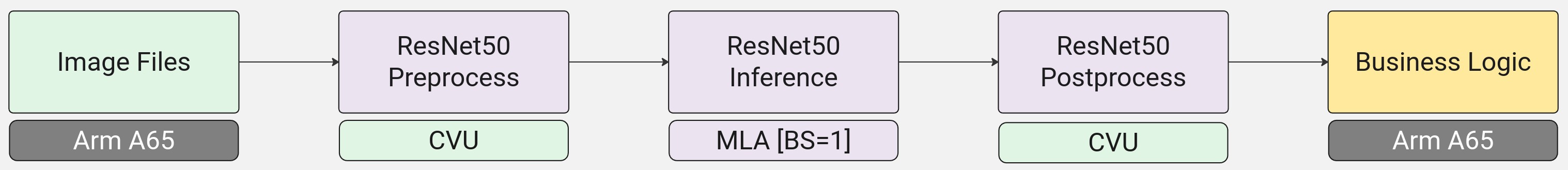

Mapping the ResNet50 Application to the MLSoC

Now that we understand the end-to-end application and its components, we can review how to map the different application stages to the MLSoC hardware functional blocks.

Image(s) Source: Will map to the Arm A65, which will read images from storage.

ResNet50 Preprocessing: Will use a graph from SiMa.ai’s CVU Graph Library (CVU Graphs) to perform resize, scaling and normalization in one go on the EV74 CVU.

ResNet50 Inference: Will run the resized and scaled image through the MLA for performant and efficient inference.

ResNet50 Postprocessing: Will use a graph from SiMa.ai’s CVU Graph Library (CVU Graphs) to perform detessellation and dequantization (more on this later) on the EV74 CVU.

Business Logic: Will print the output on the Arm A65 using regular std::cout.

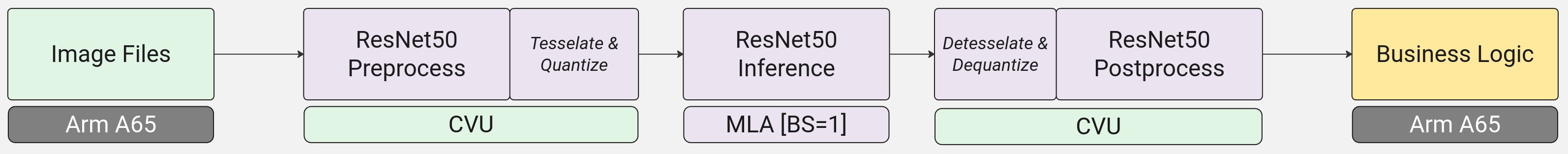

Tessellation and Quantization

Tessellation refers to breaking an image into smaller tiles or patches to improve processing efficiency. Think of it like cutting a large puzzle into smaller pieces so each part can be analyzed separately. On the MLA, tessellation specifically refers to converting input and output feature maps into the required memory layout for efficient computation.
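As a rough illustration of the idea, the sketch below reorders a row-major feature map into tile-major order and back again. It is a generic tiling example only: the actual memory layout required by the MLA is not described in this guide, so the tile shape, the tile ordering, and the function names `tessellate` and `detessellate` are all assumptions.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Generic tiling sketch. The real MLA layout is not described in this guide;
// the tile shape and ordering used here are assumptions for illustration.

// Reorder a row-major h x w map into tile-major order: tiles are visited left
// to right, top to bottom, and each tile's elements are stored contiguously.
std::vector<float> tessellate(const std::vector<float>& src, int h, int w,
                              int tile_h, int tile_w) {
    assert(tile_h > 0 && tile_w > 0 && h % tile_h == 0 && w % tile_w == 0);
    assert(src.size() == static_cast<std::size_t>(h) * w);
    std::vector<float> dst;
    dst.reserve(src.size());
    for (int ty = 0; ty < h; ty += tile_h)
        for (int tx = 0; tx < w; tx += tile_w)
            for (int y = ty; y < ty + tile_h; ++y)
                for (int x = tx; x < tx + tile_w; ++x)
                    dst.push_back(src[static_cast<std::size_t>(y) * w + x]);
    return dst;
}

// Inverse of tessellate: restore row-major order from tile-major order.
std::vector<float> detessellate(const std::vector<float>& src, int h, int w,
                                int tile_h, int tile_w) {
    assert(tile_h > 0 && tile_w > 0 && h % tile_h == 0 && w % tile_w == 0);
    assert(src.size() == static_cast<std::size_t>(h) * w);
    std::vector<float> dst(src.size());
    std::size_t i = 0;  // read position in the tile-major buffer
    for (int ty = 0; ty < h; ty += tile_h)
        for (int tx = 0; tx < w; tx += tile_w)
            for (int y = ty; y < ty + tile_h; ++y)
                for (int x = tx; x < tx + tile_w; ++x)
                    dst[static_cast<std::size_t>(y) * w + x] = src[i++];
    return dst;
}
```

For a 4x4 map holding the values 0 to 15 and 2x2 tiles, `tessellate` produces 0, 1, 4, 5, 2, 3, 6, 7, 8, 9, 12, 13, 10, 11, 14, 15, and `detessellate` restores the original order.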

Quantization reduces numerical precision (e.g., from 32-bit floating point to 8-bit integer) to improve computation speed and reduce memory usage while minimizing accuracy loss. The MLA processes data in integer format, so inputs and outputs must go through quantization (floating point to integer for MLA input) or dequantization (integer to floating point for MLA output) to ensure compatibility with the hardware.
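A common way to implement this conversion is affine quantization with a scale and a zero point. The sketch below assumes that scheme and a signed 8-bit target. The guide does not state the exact scheme the MLA uses, and `QuantParams`, `quantize`, and `dequantize` are hypothetical names.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>
#include <cstdint>

// Hypothetical parameters; in practice they come from quantizing the model.
struct QuantParams {
    float scale;       // real-value size of one integer step
    int   zero_point;  // integer that represents real 0.0
};

// Floating point -> int8: divide by the scale, round, shift by the zero
// point, then saturate to the int8 range.
std::int8_t quantize(float x, const QuantParams& q) {
    assert(q.scale > 0.0f);
    const long v = std::lround(x / q.scale) + q.zero_point;
    return static_cast<std::int8_t>(std::max(-128L, std::min(127L, v)));
}

// int8 -> floating point: undo the shift, then multiply by the scale.
float dequantize(std::int8_t v, const QuantParams& q) {
    return static_cast<float>(static_cast<int>(v) - q.zero_point) * q.scale;
}
```

With `scale = 1/255` and `zero_point = -128`, the input range 0.0 to 1.0 maps onto the full int8 range, and values outside it saturate. A quantize and dequantize round trip recovers the input to within one quantization step, which is the accuracy cost the paragraph above refers to.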

The MLA on the MLSoC has specific preprocessing and postprocessing requirements, including quantization and tessellation. SiMa.ai’s EV74 CVU graphs are designed to handle these operations efficiently, simplifying development. Understanding these processes is essential for debugging and optimizing end-to-end applications.

Now that we have a clear understanding of the application we want to run on the SiMa MLSoC, we are ready to try some demos and then build an end-to-end application.