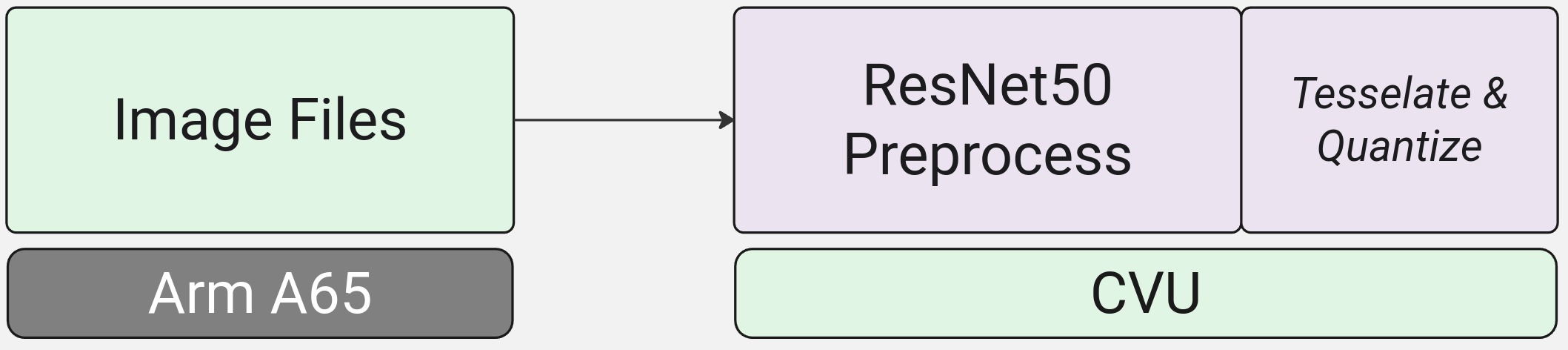

Step 1: Run and verify output of simaaiprocesscvu (CVU preprocess)

In this section, we take the image buffer read by simaaisrc and preprocess it on the CVU in preparation for sending it to the MLA.

Preprocessing an image does two things:

Replicates the preprocessing expected by the network (in this case: resize, scale, and normalize).

Quantizes and tessellates the frame. The MLA performs operations in INT8 or INT16 and therefore expects frames to be quantized before they are processed. The MLA also assumes a specific memory layout for the input frames; in this guide, we refer to this layout as tessellation.

Note

The simaaiprocesscvu plugin performs preprocessing, quantization, and tessellation. When you evaluate its output against a known reference, remember that the output pixels are quantized and stored in a slightly altered memory layout. This is why comparing only the first few pixels is useful.

As mentioned in a previous section, we need to perform the following steps to configure the EV74 CVU and run it correctly:

Choose the CVU graph we want to run (graph SIMA_GENERIC_PREPROC in this case)

Create the JSON configuration file with the right parameters for this application and the target EV74 CVU graph

Develop and compile a configuration application for it

Run the configuration application with the JSON configuration file before executing your GStreamer pipeline

Run your GStreamer pipeline, specifying the simaaiprocesscvu plugin with the JSON configuration file

The sections below walk through each step needed to run the source image, read from file, through the EV74 CVU graph.

Important

For a better developer experience, a ‘configuration application’ and many of the current parameters are to be deprecated or simplified in upcoming releases.

Choosing the CVU function

For our example, we choose the SIMA_GENERIC_PREPROC function from the list of available CVU kernels in the CVU Graphs section.

This function is useful because it performs resize, scaling, normalization, quantization, and tessellation in one step. Many networks can use this kernel as their preprocessor, so it is worth getting familiar with it.

Creating the JSON configuration file

A JSON configuration file is used in two steps of the runtime:

The CVU configuration application uses the JSON file to configure the graph parameters.

The same JSON file can be used to configure the simaaiprocesscvu plugin at runtime when the application is launched.

To create the JSON file, refer to the SIMA_GENERIC_PREPROC Parameters and Example Configuration section.

Developing and compiling the configuration application

The CVU must be configured with the graph it will run and the corresponding parameters for that graph. Currently, the developer must do this explicitly through a C++ application. Here, we present an example configuration application for the SIMA_GENERIC_PREPROC graph that works for the ResNet50 example.

Go to the SIMA_GENERIC_PREPROC section. Either download the prewritten, precompiled configuration application, or follow the instructions there to write or edit the source yourself.

To compile the application in Palette, refer to the "How to compile CVU Configuration Application?" section of the EV74 CVU documentation.

Copying the configuration application and the JSON configuration to the board

Once the application has been compiled or downloaded, we need to copy it to the board.

On the MLSoC, let’s create a directory for our configuration application and JSON files:

davinci:~$ cd /home/sima/resnet50_example_app

davinci:~/resnet50_example_app$ mkdir app_configs

davinci:~/resnet50_example_app$ ls

app_configs data gst_simaaisrc_output.txt run_pipeline.sh

Next, create the JSON file with the CVU configuration parameters described in the previous section. On the MLSoC, change into the new directory:

davinci:~/resnet50_example_app$ cd app_configs

davinci:~/resnet50_example_app/app_configs$

Run the following command:

echo '{ "version": 0.1, "node_name": "generic_preproc", "simaai__params": { "params": 15, "cpu": 1, "next_cpu": 4, "no_of_outbuf": 1, "ibufname": "null", "graph_id": 200, "img_width": 1920, "img_height": 1080, "input_width": 1920, "input_height": 1080, "output_width": 224, "output_height": 224, "scaled_width": 224, "scaled_height": 224, "batch_size": 1, "normalize": 1, "rgb_interleaved": 1, "aspect_ratio": 0, "tile_width": 32, "tile_height": 16, "input_depth": 3, "output_depth": 3, "quant_zp": -14, "quant_scale": 53.59502566491281, "mean_r": 0.406, "mean_g": 0.456, "mean_b": 0.485, "std_dev_r": 0.225, "std_dev_g": 0.224, "std_dev_b": 0.229, "input_type": 2, "output_type": 0, "scaling_type": 3, "offset": 150528, "padding_type": 4, "input_stride": 0, "output_stride": 0, "output_dtype": 0, "debug": 0, "out_sz": 301056, "dump_data": 1 } }' > genpreproc_200_cvu_cfg_params.json
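Some fields in this file are derived from others. According to the parameter notes, offset is the size of the tessellated 224x224x3 output, and out_sz is that size plus the size of the plain output tensor. The following Python sketch checks those relationships in the loaded JSON before you use it. It assumes int8 output (output_dtype 0, one byte per value). The function name check_derived_fields is hypothetical and is not part of the SiMa tooling.

```python
import json  # used by the caller to load the config file


def check_derived_fields(cfg: dict) -> list[str]:
    """Return human-readable problems found in a generic_preproc config dict."""
    p = cfg["simaai__params"]
    problems = []
    # Bytes in one resized frame (assumes int8 output: 1 byte per channel value).
    tensor_size = p["scaled_width"] * p["scaled_height"] * p["output_depth"]
    if p["offset"] != tensor_size:
        problems.append(f"offset={p['offset']} but scaled tensor is {tensor_size} bytes")
    # Per the parameter notes: out_sz = tessellated output + output tensor size.
    expected_out_sz = p["offset"] + tensor_size
    if p["out_sz"] != expected_out_sz:
        problems.append(f"out_sz={p['out_sz']} but expected {expected_out_sz}")
    # In this example, img_* and input_* both describe the same simaaisrc frame.
    if (p["img_width"], p["img_height"]) != (p["input_width"], p["input_height"]):
        problems.append("img_* and input_* dimensions differ")
    return problems
```

To run the check on the board, load the file with `json.load(open("genpreproc_200_cvu_cfg_params.json"))` and pass the result to check_derived_fields. An empty list means the derived sizes are consistent.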

Note

For a full explanation of each parameter, refer to the SIMA_GENERIC_PREPROC section.

params: 15 –> Internal use only; do not change.

cpu: 1 –> HW IP this graph executes on (1 == CVU).

next_cpu: 4 –> HW IP the output will be processed on next (4 == MLA).

no_of_outbuf: 1 –> Internal use only; do not change.

ibufname: "null" –> Internal use only; do not change.

graph_id: 200 –> ID of the target kernel; 200 == SIMA_GENERIC_PREPROC.

img_width: 1920 –> Width of the input image from the simaaisrc plugin (will be deprecated in a future release).

img_height: 1080 –> Height of the input image from the simaaisrc plugin (will be deprecated in a future release).

input_width: 1920 –> Width of the input image from simaaisrc.

input_height: 1080 –> Height of the input image from simaaisrc.

output_width: 224 –> Not applicable, since aspect_ratio is set to false.

output_height: 224 –> Not applicable, since aspect_ratio is set to false.

scaled_width: 224 –> Width of the resized output image. ResNet50 expects 224x224 images.

scaled_height: 224 –> Height of the resized output image. ResNet50 expects 224x224 images.

batch_size: 1 –> Batch size the model was compiled for.

normalize: 1 –> Enables normalization.

rgb_interleaved: 1 –> Whether the output image should be tessellated (0) or not (1). Set it to 1, because an explicit tessellation kernel is invoked in the graph.

aspect_ratio: 0 –> The output image height and width will equal the scaled_height and scaled_width values.

tile_width: 32 –> Width of the slice/tile for tessellation, taken from ‘slice_width’ of the tesselation transform in the model tar.gz *_mpk.json.

tile_height: 16 –> Height of the slice/tile for tessellation, taken from ‘slice_height’ of the tesselation transform in the model tar.gz *_mpk.json.

input_depth: 3 –> Number of channels in the input image.

output_depth: 3 –> Number of channels in the output image.

quant_zp: -14 –> Quantization zero point, from ‘channel_params’[1] in the model tar.gz *_mpk.json.

quant_scale: 53.59502566491281 –> Quantization scale, from ‘channel_params’[0] in the model tar.gz *_mpk.json.

mean_r: 0.406 –> Dataset mean for channel R, used for normalization (same as the reference app preprocessing function).

mean_g: 0.456 –> Dataset mean for channel G, used for normalization (same as the reference app preprocessing function).

mean_b: 0.485 –> Dataset mean for channel B, used for normalization (same as the reference app preprocessing function).

std_dev_r: 0.225 –> Dataset standard deviation for channel R, used for normalization (same as the reference app preprocessing function).

std_dev_g: 0.224 –> Dataset standard deviation for channel G, used for normalization (same as the reference app preprocessing function).

std_dev_b: 0.229 –> Dataset standard deviation for channel B, used for normalization (same as the reference app preprocessing function).

input_type: 2 –> The input type of the loaded image is RGB.

output_type: 0 –> The output type sent to the MLA is RGB.

scaling_type: 3 –> Bilinear scaling (same as the reference app preprocessing function).

offset: 150528 –> Size of the tessellated output (224 x 224 x 3). It can be read from the model tar.gz *_mpk.json at {"plugins" -> "name": "*_tesselation_transform" -> "output_nodes" -> "size"}.

padding_type: 4 –> Center padding. Given our resize, no padding is actually applied.

input_stride: 0 –> No strides needed.

output_stride: 0 –> No strides needed.

output_dtype: 0 –> int8 output to the MLA.

debug: 0 –> Debugging disabled.

out_sz: 301056 –> out_sz = tessellated output size + output_size, where output_size is the size of the expected output tensor. A 224x224x3 resize gives an output size of 150528 bytes, so out_sz = 150528 + 150528 = 301056.

dump_data: 1 –> Dump the output tensor so that we can debug it.
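Several of these values (quant_scale and quant_zp in particular) are copied from the model's *_mpk.json inside the compiled .tar.gz. The following hedged sketch reads both values using Python's standard tarfile and json modules. It assumes the key path plugins[0] → config_params → params → channel_params[0] given in the "Verifying the output" part of this guide, and it assumes the archive contains a member whose name ends in _mpk.json. The helper name read_quant_params is hypothetical.

```python
import json
import tarfile


def read_quant_params(model_tar_gz: str) -> tuple[float, int]:
    """Return (quant_scale, quant_zp) read from the *_mpk.json inside a model archive."""
    with tarfile.open(model_tar_gz, "r:gz") as tar:
        # Assumption: the first member ending in "_mpk.json" is the one we want.
        member = next((m for m in tar.getmembers() if m.name.endswith("_mpk.json")), None)
        if member is None:
            raise FileNotFoundError(f"no *_mpk.json found in {model_tar_gz}")
        mpk = json.load(tar.extractfile(member))
    # Key path as given in this guide: channel_params[0][0] is the scale,
    # channel_params[0][1] is the zero point.
    channel_params = mpk["plugins"][0]["config_params"]["params"]["channel_params"]
    return float(channel_params[0][0]), int(channel_params[0][1])
```

For the ResNet50 model in this guide, the values returned should match quant_scale (53.59502566491281) and quant_zp (-14) in genpreproc_200_cvu_cfg_params.json.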

From the Palette Docker container on the development host machine, use scp to copy the configuration application binary (genpreproc_200_cvu_cfg_app) to the same folder on the MLSoC:

sima-user@docker-image-id:/home/docker/sima-cli/ev74_cgfs/sima_generic_preproc/build$ scp genpreproc_200_cvu_cfg_app sima@<IP address of MLSoC>:/home/sima/resnet50_example_app/app_configs

genpreproc_200_cvu_cfg_app 100% 65KB 9.7MB/s 00:00

Note

/home/docker/sima-cli/ev74_cgfs/sima_generic_preproc/build is the directory in the Palette Docker container where the sima_generic_preproc configuration application was built.

The directory on the MLSoC should now look like this:

davinci:~/resnet50_example_app/app_configs$ ls

genpreproc_200_cvu_cfg_app genpreproc_200_cvu_cfg_params.json

We now have the parameters with the right values, and the application necessary to configure the CVU for our preprocessing step.

Running the configuration application

Run the configuration application on the MLSoC, passing the JSON configuration file as its argument. From the app_configs directory, run:

davinci:~/resnet50_example_app/app_configs$ sudo ./genpreproc_200_cvu_cfg_app genpreproc_200_cvu_cfg_params.json

Password:

Completed SIMA_GENERIC_PREPROC graph configure

To verify that the configuration was set correctly, check the EV74 log at /var/log/simaai_EV74.log. The output should look similar to the following:

Note

Sometimes it can take a few seconds to a minute for the log to update.

davinci:/home/sima/resnet50_example_app/app_configs$ sudo tail -f /var/log/simaai_EV74.log

... function="dump_generic_preproc_params"]----------- dump sima gen preproc -----------

... function="dump_generic_preproc_params"]Input width: 1920

... function="dump_generic_preproc_params"]Input height: 1080

... function="dump_generic_preproc_params"]Output width: 224

... function="dump_generic_preproc_params"]Output height: 224

... function="dump_generic_preproc_params"]scaled width: 224

... function="dump_generic_preproc_params"]scaled height: 224

... function="dump_generic_preproc_params"]input stride: 0

... function="dump_generic_preproc_params"]output stride: 0

... function="dump_generic_preproc_params"]output datatype: 0

... function="dump_generic_preproc_params"]tile width: 32

... function="dump_generic_preproc_params"]tile height: 16

... function="dump_generic_preproc_params"]input depth: 3

... function="dump_generic_preproc_params"]output depth: 3

... function="dump_generic_preproc_params"]quantScale : 53.602257

... function="dump_generic_preproc_params"]qzeroPoint : -14

... function="dump_generic_preproc_params"]batch size : 1

... function="dump_generic_preproc_params"]normalization : true

... function="dump_generic_preproc_params"]rgb interleaved : true

... function="dump_generic_preproc_params"]aspect ratio : false

... function="dump_generic_preproc_params"]mean [RGB]: [0.406000, 0.456000, 0.485000]

... function="dump_generic_preproc_params"]std deviation [RGB]: [0.225000, 0.224000, 0.229000]

... function="dump_generic_preproc_params"]qOffset[0]: 103.529999

... function="dump_generic_preproc_params"]qMultiplier[0]: 0.934244

... function="dump_generic_preproc_params"]qOffset[1]: 116.279999

... function="dump_generic_preproc_params"]qMultiplier[1]: 0.938415

... function="dump_generic_preproc_params"]qOffset[2]: 123.675003

... function="dump_generic_preproc_params"]qMultiplier[2]: 0.917925

... function="dump_generic_preproc_params"]input type : 2

... function="dump_generic_preproc_params"]output type : 0

... function="dump_generic_preproc_params"]scaling type : 3

... function="dump_generic_preproc_params"]padding type : 4

... function="dump_generic_preproc_params"]offset : 150528

... function="select_preproc_kernel"]scaling : BILINEAR

... function="select_preproc_kernel"]input : RGB
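The per-channel qOffset and qMultiplier values in this log can be recomputed from the JSON parameters. The logged numbers are consistent with qOffset = 255 × mean and qMultiplier = quantScale / (255 × std_dev). This relationship is inferred from the values shown here, not taken from SiMa documentation. The multipliers are reproduced using the logged quantScale (53.602257), not the quant_scale of 53.59502566491281 set in the JSON. The sketch below parses the log text and compares it against that inferred relationship. The regular expression and helper names are hypothetical.

```python
import re

# Matches log fragments such as: ...]qOffset[0]: 103.529999
LOG_RE = re.compile(r"(qOffset|qMultiplier)\[(\d)\]:\s*(-?[\d.]+)")


def parse_q_values(log_text: str) -> dict[str, list[float]]:
    """Collect qOffset[i] and qMultiplier[i] (i = 0..2) from EV74 log text."""
    values = {"qOffset": [0.0] * 3, "qMultiplier": [0.0] * 3}
    for name, idx, val in LOG_RE.findall(log_text):
        values[name][int(idx)] = float(val)
    return values


def expected_q_values(quant_scale: float, means, stds) -> dict[str, list[float]]:
    """Recompute the constants using the relationship inferred from the log."""
    return {
        "qOffset": [255.0 * m for m in means],
        "qMultiplier": [quant_scale / (255.0 * s) for s in stds],
    }
```

On the board, you could pass the contents of /var/log/simaai_EV74.log to parse_q_values. Then compare the result with expected_q_values(53.602257, [0.406, 0.456, 0.485], [0.225, 0.224, 0.229]) using a small tolerance, because the log prints six decimal places.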

The GStreamer string update

Let’s update the previous run_pipeline.sh script to include our new plugin.

#!/bin/bash

# Constants

APP_DIR=/home/sima/resnet50_example_app

DATA_DIR="${APP_DIR}/data"

SIMA_PLUGINS_DIR="${APP_DIR}/../gst-plugins"

SAMPLE_IMAGE_SRC="${DATA_DIR}/golden_retriever_207_rgb.bin"

CONFIGS_DIR="${APP_DIR}/app_configs"

PREPROC_CVU_CONFIG_BIN="${CONFIGS_DIR}/genpreproc_200_cvu_cfg_app"

PREPROC_CVU_CONFIG_JSON="${CONFIGS_DIR}/genpreproc_200_cvu_cfg_params.json"

# Remove any existing temporary files before running

rm /tmp/generic_preproc*.out

# Run the configuration app for generic_preproc

"$PREPROC_CVU_CONFIG_BIN" "$PREPROC_CVU_CONFIG_JSON"

# Run the application

export LD_LIBRARY_PATH="${SIMA_PLUGINS_DIR}"

gst-launch-1.0 -v --gst-plugin-path="${SIMA_PLUGINS_DIR}" \

simaaisrc mem-target=1 node-name="my_image_src" location="${SAMPLE_IMAGE_SRC}" num-buffers=1 ! \

simaaiprocesscvu source-node-name="my_image_src" buffers-list="my_image_src" config="$PREPROC_CVU_CONFIG_JSON" name="generic_preproc" ! \

fakesink
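If you prefer to drive the same two steps from Python (for example, from the reference application), here is a minimal subprocess sketch. It only mirrors the paths and element properties used in run_pipeline.sh. The helper names build_commands and run are hypothetical, and the sketch assumes it is launched with the same privileges as the script (which is run with sudo).

```python
import os
import subprocess

APP_DIR = "/home/sima/resnet50_example_app"
CONFIGS_DIR = f"{APP_DIR}/app_configs"
PLUGINS_DIR = f"{APP_DIR}/../gst-plugins"
CFG_APP = f"{CONFIGS_DIR}/genpreproc_200_cvu_cfg_app"
CFG_JSON = f"{CONFIGS_DIR}/genpreproc_200_cvu_cfg_params.json"


def build_commands(image_path: str) -> list[list[str]]:
    """Return [configure_argv, launch_argv], mirroring run_pipeline.sh."""
    configure = [CFG_APP, CFG_JSON]
    launch = [
        "gst-launch-1.0", "-v", f"--gst-plugin-path={PLUGINS_DIR}",
        "simaaisrc", "mem-target=1", "node-name=my_image_src",
        f"location={image_path}", "num-buffers=1", "!",
        "simaaiprocesscvu", "source-node-name=my_image_src",
        "buffers-list=my_image_src", f"config={CFG_JSON}",
        "name=generic_preproc", "!",
        "fakesink",
    ]
    return [configure, launch]


def run(image_path: str) -> None:
    """Configure the CVU first, then launch the pipeline; stop on the first failure."""
    env = {**os.environ, "LD_LIBRARY_PATH": PLUGINS_DIR}
    for argv in build_commands(image_path):
        subprocess.run(argv, check=True, env=env)
```

Passing each token as a separate argv element avoids shell quoting. gst-launch-1.0 joins its arguments back into a single pipeline description.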

To run the application:

davinci:~/resnet50_example_app$ sudo sh run_pipeline.sh

rm: cannot remove '/tmp/generic_preproc*.out': No such file or directory

Completed SIMA_GENERIC_PREPROC graph configure

(gst-plugin-scanner:1565): GLib-GObject-CRITICAL **: 04:12:38.141: g_pointer_type_register_static: assertion 'g_type_from_name (name) == 0' failed

(gst-plugin-scanner:1565): GLib-GObject-CRITICAL **: 04:12:38.141: g_type_set_qdata: assertion 'node != NULL' failed

** Message: 04:12:38.249: Num of chunks 1

** Message: 04:12:38.249: Buffer_name: my_image_src, num_of_chunks:1

Setting pipeline to PAUSED ...

** Message: 04:12:38.258: Filename memalloc = /data/simaai/building_apps_palette/gstreamer/resnet50_example_app/data/golden_retriever_207_rgb.bin

Pipeline is PREROLLING ...

Pipeline is PREROLLED ...

Setting pipeline to PLAYING ...

Redistribute latency...

New clock: GstSystemClock

Got EOS from element "pipeline0".

Execution ended after 0:00:00.001315982

Setting pipeline to NULL ...

Freeing pipeline ...

Note

rm: cannot remove '/tmp/generic_preproc*.out': No such file or directory is expected the first time you run the pipeline.

The (gst-plugin-scanner:1565): GLib-GObject-CRITICAL ** messages are also expected.

The output of simaaiprocesscvu is dumped to /tmp/generic_preproc-001.out, which we use for verification in the next step.

Tip

Notice that we set the dump_data parameter in genpreproc_200_cvu_cfg_params.json to 1 in order to dump the output of the simaaiprocesscvu plugin.

This helps in two ways:

The dump can be used to verify that the output is functionally correct. This can also be done through gdb debugging; more on that in the debugging section.

The dump can be fed directly to another plugin using the simaaisrc plugin, so that you can debug one plugin at a time.

Verifying the output

The output of simaaiprocesscvu is tessellated and quantized.

To verify it against a known reference, we take the original fp32 preprocessed image and quantize it manually so that we can compare the first few pixels.

Note

We can ignore tessellation in this section because we are only comparing the first few pixels.
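To see why the leading bytes survive tessellation, consider a hypothetical layout. The frame is cut into tile_height x tile_width tiles (16 x 32 here), the tiles are stored one after another in row-major order, and each tile is flattened in HWC order. This is an illustrative assumption, not SiMa's documented tessellation format. The sketch shows that any layout that stores the top-left tile first, starting with its first pixel, begins with the same bytes as the plain NHWC buffer, even though the two layouts diverge soon after.

```python
import numpy as np


def tessellate_hwc(img: np.ndarray, tile_h: int, tile_w: int) -> np.ndarray:
    """Illustrative (hypothetical) tiling: row-major tiles, each flattened in HWC order."""
    h, w, c = img.shape
    if h % tile_h or w % tile_w:
        raise ValueError("image dimensions must be multiples of the tile size")
    # Split into (tile_row, y_in_tile, tile_col, x_in_tile, channel).
    tiles = img.reshape(h // tile_h, tile_h, w // tile_w, tile_w, c)
    # Reorder to (tile_row, tile_col, y_in_tile, x_in_tile, channel), then flatten.
    return tiles.transpose(0, 2, 1, 3, 4).reshape(-1)
```

With a 224x224x3 frame and 16x32 tiles, the first 96 bytes (one tile row of 32 pixels x 3 channels) match the NHWC buffer. The next byte, however, comes from image row 1 rather than row 0.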

We will need two things:

A preprocessed fp32 reference image, which we will refer to as image_data: our resized, scaled, and normalized fp32 image in NHWC format. Our ONNX Runtime script already dumps the preprocessed image in NHWC format as golden_retriever_207_preprocessed_rgb_nhwc_fp32.bin.

The quantization parameters output by the ModelSDK. In this case:

Quantization scale: 53.59502566491281. Found under plugins[0] → config_params → params → channel_params[0][0] in the *_mpk.json (inside the .tar.gz). It should match the quant_scale parameter in our genpreproc_200_cvu_cfg_params.json file.

Quantization zero point: -14. Found under plugins[0] → config_params → params → channel_params[0][1] in the *_mpk.json (inside the .tar.gz). It should match the quant_zp parameter in our genpreproc_200_cvu_cfg_params.json file.
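With these two values, image_data can be quantized directly, because it is already normalized. The sketch below loads the reference dump and applies affine int8 quantization, round(x × quant_scale) + quant_zp. It makes three assumptions: the .bin is a raw, headerless, native-endian float32 dump of shape 1x224x224x3; out-of-range results saturate to the int8 range; and the helper names load_reference and quantize (which are hypothetical) fit your setup.

```python
import numpy as np

QUANT_SCALE = 53.59502566491281  # channel_params[0][0]
QUANT_ZP = -14                   # channel_params[0][1]


def load_reference(path: str, h: int = 224, w: int = 224, c: int = 3) -> np.ndarray:
    """Load the raw fp32 NHWC dump written by the ONNX Runtime reference script."""
    return np.fromfile(path, dtype=np.float32).reshape(1, h, w, c)


def quantize(image_data: np.ndarray, scale: float = QUANT_SCALE, zp: int = QUANT_ZP) -> np.ndarray:
    """Affine int8 quantization of a normalized image, saturated to [-128, 127]."""
    q = np.round(image_data * scale) + zp
    return np.clip(q, -128, 127).astype(np.int8)
```

For example, quantize(load_reference("golden_retriever_207_preprocessed_rgb_nhwc_fp32.bin")).reshape(-1)[:3] gives the first three reference values to compare against the CVU dump.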

To normalize and quantize the reference pixels the same way the CVU does, apply the following formula to each channel value of the resized image before normalization (the formula performs the normalization itself), and round the result to the nearest integer:

quantized_frame_from_reference = (resized_image_pixel - qOffset) * qMultiplier + quantized_zero_point

Here, qOffset and qMultiplier are the per-channel values printed in /var/log/simaai_EV74.log when the configuration application set the CVU parameters, and quantized_zero_point is quant_zp (-14).

The formulas are also documented in the SIMA_GENERIC_PREPROC reference section.
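This formula combines normalization and affine quantization into a single step. Assume the normalization is (p/255 - mean)/std. This is an assumption, but the logged constants agree with it: 255 × 0.406 = 103.53 (qOffset[0]) and 53.602257 / (255 × 0.225) ≈ 0.934244 (qMultiplier[0]). With p_c a resized pixel value in channel c, s = quant_scale, and z = quant_zp, the two forms expand as:

$$
q_c = \operatorname{round}\left(\frac{p_c/255 - \mu_c}{\sigma_c}\, s + z\right)
    = \operatorname{round}\left((p_c - 255\,\mu_c)\,\frac{s}{255\,\sigma_c} + z\right)
    = \operatorname{round}\left((p_c - \mathrm{qOffset}_c)\,\mathrm{qMultiplier}_c + z\right)
$$

So quantizing the normalized image_data directly as round(image_data × s + z) gives the same result as applying the formula to the resized pixels, up to rounding in the printed constants.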

Going back to the reference application debug console, if we take the first three values of resized_image in preprocess_image() and apply the formula, we get:

np.round((resized_image.flatten()[:3] - [103.529999, 116.279999, 123.675003]) * [0.934244, 0.938415, 0.917925] - 14)

array([-34., -59., -89.], dtype=int8)
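The same computation can be packaged as a reusable function for more than three values. In the sketch below, Q_OFFSET and Q_MULTIPLIER are copied from the EV74 log above, and quantize_resized is a hypothetical name. Saturating to [-128, 127] is an assumption about the CVU's behavior. NumPy does not guarantee that out-of-range float-to-int8 casts saturate, which is why the sketch clips explicitly before casting.

```python
import numpy as np

# Per-channel constants copied from /var/log/simaai_EV74.log (channel order as logged).
Q_OFFSET = np.array([103.529999, 116.279999, 123.675003])
Q_MULTIPLIER = np.array([0.934244, 0.938415, 0.917925])
QUANT_ZP = -14


def quantize_resized(pixels: np.ndarray) -> np.ndarray:
    """Normalize and quantize resized 0-255 values shaped (..., 3) into int8."""
    q = np.round((pixels - Q_OFFSET) * Q_MULTIPLIER + QUANT_ZP)
    # Assumed saturating behavior; clip before casting so the cast is well defined.
    return np.clip(q, -128, 127).astype(np.int8)
```

Because the constants broadcast over the last axis, the function can be applied to a whole 224x224x3 resized image at once.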

The first three bytes of /tmp/generic_preproc-001.out should match these values:

davinci:~/resnet50_example_app$ hexdump -C -n 3 /tmp/generic_preproc-001.out

00000000 de c5 a7 |...|

00000003

Interpreting these bytes as int8, the type they were saved as, confirms that they match the reference values:

0xde => 1101 1110 => -34

0xc5 => 1100 0101 => -59

0xa7 => 1010 0111 => -89
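The hexdump check can also be scripted. This hedged sketch reads the first bytes of the dump as signed int8 (equivalent to hexdump -n followed by the two's-complement interpretation above) and compares them with the reference values. compare_dump is a hypothetical helper. The optional tol argument, which allows differences of a few units, is an assumption for cases where the CVU and the reference round slightly differently. The default of 0 requires an exact match, as in this example.

```python
import numpy as np


def compare_dump(dump_path: str, reference, tol: int = 0):
    """Compare the first len(reference) int8 values of a dump with reference values.

    Returns (match, values_read).
    """
    ref = np.asarray(reference, dtype=np.int16)
    got = np.fromfile(dump_path, dtype=np.int8, count=ref.size)
    if got.size != ref.size:  # file shorter than expected
        return False, got
    # Widen to int16 before subtracting so the difference cannot overflow int8.
    diff = np.abs(got.astype(np.int16) - ref)
    return bool((diff <= tol).all()), got
```

For this example, compare_dump("/tmp/generic_preproc-001.out", [-34, -59, -89]) should return a match.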

Conclusion and next steps

In this section, we:

Went through the steps necessary to choose the CVU graph we want to run, create its configuration application, and copy it to the MLSoC

Went through how to set up the JSON configuration file for the CVU graph we are running, along with a description of each parameter value

Ran the pipeline and verified its output, comparing the plugin's dump (enabled in the JSON) against the output of our Python reference application

In the next section, we will add the MLA simaaiprocessmla plugin in order to perform inference.