ML application overview

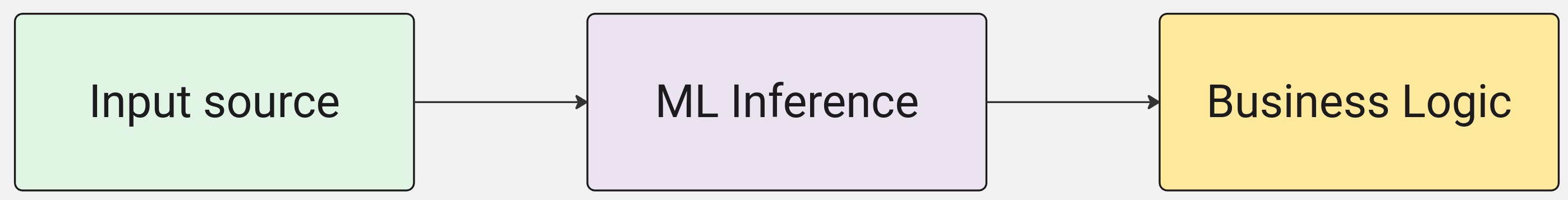

In this section, we will look at the typical components of an ML application, define the classification application that we will use throughout this guide, and map the application stages to the various hardware IPs of the MLSoC. As shown below, even simple applications require multiple steps, and a solid grasp of the architecture is critical when developing on the MLSoC. Let's first outline a simple pipeline architecture before beginning development:

A typical ML application

A very simple ML classification application looks something like this:

Input Source: Any input into the application, such as an RTSP stream, a video file, image files, or an audio stream.

ML Inference: Running the ML model to classify the input.

Business Logic: Actions taken in response to the ML inference result, such as saving or displaying information, communicating with a PLC, or writing to network storage.
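The three stages can be sketched as a simple loop that feeds each input item through inference and hands the result to the business logic. This is a hypothetical Python skeleton for illustration only: `run_pipeline` and the stage callables are not part of any SiMa.ai API, and on the MLSoC the stages are executed as a GStreamer pipeline rather than a Python loop.

```python
from typing import Callable, Iterable, List

# Hypothetical stage signatures (illustrative only, not a SiMa.ai API):
#   infer:          one input item -> list of per-class scores
#   business_logic: (input item, scores) -> None
def run_pipeline(source: Iterable[object],
                 infer: Callable[[object], List[float]],
                 business_logic: Callable[[object, List[float]], None]) -> None:
    for item in source:               # Input Source: frames, files, audio chunks, ...
        scores = infer(item)          # ML Inference: run the model on one item
        business_logic(item, scores)  # Business Logic: act on the result


if __name__ == "__main__":
    # Toy usage with a fake "model" that always returns the same scores.
    run_pipeline(["img0.jpg", "img1.jpg"],
                 infer=lambda item: [0.1, 0.9],
                 business_logic=lambda item, s: print(item, s))
```

Keeping each stage as a separate callable mirrors the idea that each stage can later be moved to a different processing unit without changing the others.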

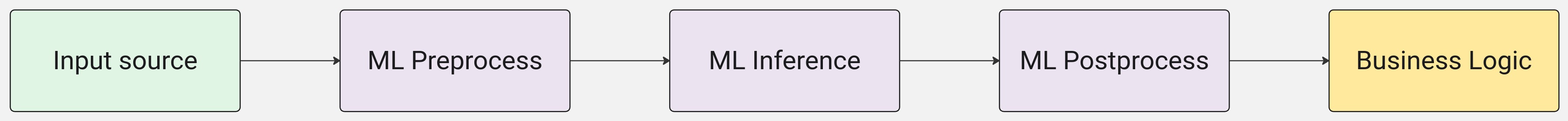

ML Preprocessing and Postprocessing

In practice, ML models require very specific preprocessing and postprocessing steps. If we break the generic case down a bit further, we get the following:

ML Preprocess: The specific preprocessing required by the ML model being deployed (resizing, normalization, etc.).

ML Inference: The actual neural network (NN) model inference.

ML Postprocess: The postprocessing applied to the output of the ML Inference step (softmax, argmax, box decoding, etc.).
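As an illustration of a typical classification postprocess, the sketch below applies softmax and then argmax to a list of raw model outputs in plain Python. It assumes the model emits unnormalized logits; a model that already outputs probabilities would skip the softmax. Box decoding for detection models is not shown.

```python
import math
from typing import List, Tuple

def softmax(logits: List[float]) -> List[float]:
    # Subtract the largest logit first so math.exp cannot overflow.
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]

def argmax(values: List[float]) -> int:
    # Index of the largest value; on ties, the first index wins.
    return max(range(len(values)), key=values.__getitem__)

def postprocess(logits: List[float]) -> Tuple[int, float]:
    # Returns (class index, confidence) for the top-scoring class.
    probs = softmax(logits)
    best = argmax(probs)
    return best, probs[best]
```

For example, `postprocess([1.0, 3.0, 2.0])` returns class index 1 with a confidence of about 0.665.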

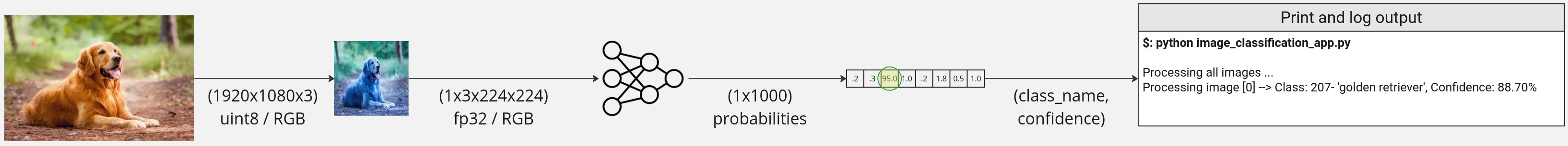

ResNet50 classification application

For this guide, we will use a very simple classification application that reports the object type and a confidence score for any given image.

The application pipeline consists of the following steps:

Image(s) Source: Will read all images from a local directory.

ResNet50 Preprocessing: Will resize the image to 224x224 (height x width), then scale and normalize the image values to be between 0.0 and 1.0.

ResNet50 Inference: Will run the resized and scaled image through ResNet50 NN inference.

ResNet50 Postprocessing: Will perform argmax on all the output values and pick the highest-confidence category.

Business Logic: Will print the category and the confidence score for each result received.
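The ResNet50 preprocessing step can be sketched in plain Python as below. This is an illustration only, assuming the decoded image is an H x W x C nested list of 8-bit values (0 to 255) and using nearest-neighbor interpolation for the resize; the interpolation method used by the actual reference application and CVU graph may differ. Dividing by 255 maps the pixel values into the 0.0 to 1.0 range described above.

```python
from typing import List

# Assumed layout: image[y][x] is a list of channel values in 0..255.
Image = List[List[List[int]]]
FloatImage = List[List[List[float]]]

def resize_nearest(img: Image, out_h: int = 224, out_w: int = 224) -> Image:
    # Nearest-neighbor resize: each output pixel copies the input pixel
    # whose scaled coordinate it falls on.
    in_h, in_w = len(img), len(img[0])
    return [[img[(y * in_h) // out_h][(x * in_w) // out_w]
             for x in range(out_w)]
            for y in range(out_h)]

def scale_to_unit(img: Image) -> FloatImage:
    # Map 0..255 integer values to 0.0..1.0 floats.
    return [[[c / 255.0 for c in px] for px in row] for row in img]

def preprocess(img: Image) -> FloatImage:
    # Resize to 224x224 (height x width), then scale to 0.0..1.0.
    return scale_to_unit(resize_nearest(img))
```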

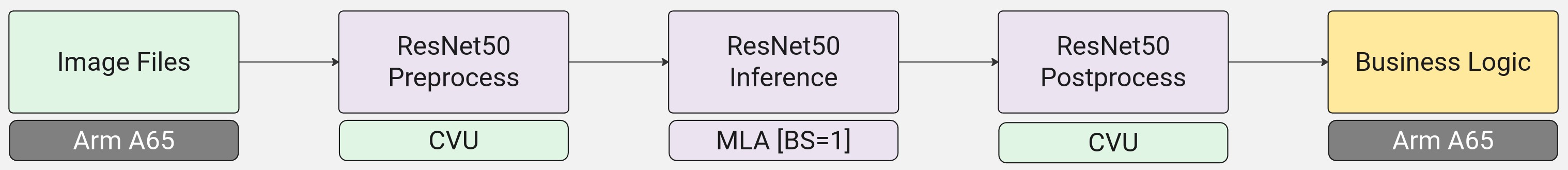

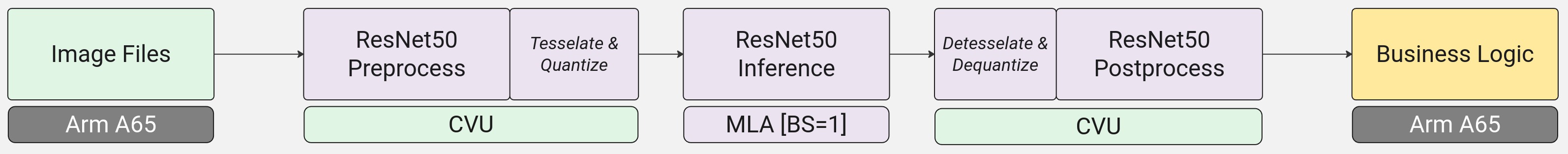

Mapping the ResNet50 application to the MLSoC

Now that we understand the end-to-end application and its components, we can review how to map the different application stages to the MLSoC.

Image(s) Source: Will map to the Arm A65, which will read images from storage.

ResNet50 Preprocessing: Will use a graph from SiMa.ai's CVU Graph Library (CVU Graphs) to perform resizing, scaling, and normalization in one go on the EV74 CVU.

ResNet50 Inference: Will run the resized and scaled image through the MLA for performant and efficient inference.

ResNet50 Postprocessing: Will use a graph from SiMa.ai's CVU Graph Library (CVU Graphs) to perform detesselation and dequantization (more on this later) on the EV74 CVU.

Business Logic: Will print the output on the Arm A65 using regular std::cout.

Tesselation and Quantization

Lastly, the MLA on the MLSoC has preprocessing and postprocessing requirements of its own (quantization and tesselation). SiMa.ai EV74 CVU graphs are designed and optimized to perform these operations on the developer's behalf, but it is important to understand them when developing and debugging end-to-end applications.

Tesselation/Detesselation: The MLA requires a specific memory layout for all of its input and output feature maps. Converting an input or output to or from this memory layout is referred to as tesselation (or detesselation, for the reverse direction) in this guide.

Quantization/Dequantization: The MLA requires inputs to be of an integer data type. Inputs and outputs must go through quantization or dequantization to convert feature maps from float to integer (MLA input) or from integer to float (MLA output).
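To make quantization and dequantization concrete, the sketch below shows a common affine scheme, q = clamp(round(x / scale) + zero_point, qmin, qmax), and its inverse x ≈ (q - zero_point) * scale. The int8 range, the scale, and the zero point used here are illustrative assumptions; the actual data type and quantization parameters used by the MLA are not specified in this guide and are applied for you by the CVU graphs. Tesselation is not shown, because its memory layout is MLA-specific.

```python
from typing import List

def quantize(x: List[float], scale: float, zero_point: int,
             qmin: int = -128, qmax: int = 127) -> List[int]:
    # float -> integer: divide by scale, round (Python rounds half to even),
    # shift by the zero point, and clamp to the assumed int8 range.
    return [max(qmin, min(qmax, round(v / scale) + zero_point)) for v in x]

def dequantize(q: List[int], scale: float, zero_point: int) -> List[float]:
    # integer -> float: undo the shift and the scaling.
    return [(v - zero_point) * scale for v in q]
```

With the illustrative parameters scale = 1/255 and zero_point = -128, the 0.0 to 1.0 range produced by preprocessing maps onto -128 to 127, and a quantize/dequantize round trip is accurate to within half a scale step for in-range values.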

Conclusion and next steps

In this section, we:

Reviewed the processing units of the MLSoC and the Palette software stack, which uses GStreamer to execute end-to-end applications.

Reviewed the high-level steps of porting an ML application to the MLSoC.

Reviewed what a typical ML application looks like and how it maps to the MLSoC.

Now that we have a clear understanding of the application we want to run on the MLSoC, we are ready to start building an end-to-end application using GStreamer.

The first step is to look at our reference ResNet50 application, which runs on the host machine using onnxruntime.

This will serve as the baseline that we port to the MLSoC.