ModelSDK - Compiling ML Models

The first step in developing any pipeline is to compile the ML models so that they can run on the MLA. To compile the ResNet50 model used in our example application, we run the script below inside the Palette Docker container. The script uses the ModelSDK APIs to load the ONNX model, quantize it to INT8, test the quantized model, and compile it.

```python
#**************************************************************************
#|| SiMa.ai CONFIDENTIAL ||
#|| Unpublished Copyright (c) 2023-2024 SiMa.ai, All Rights Reserved. ||
#**************************************************************************
# NOTICE: All information contained herein is, and remains the property of
# SiMa.ai. The intellectual and technical concepts contained herein are
# proprietary to SiMa and may be covered by U.S. and Foreign Patents,
# patents in process, and are protected by trade secret or copyright law.
#
# Dissemination of this information or reproduction of this material is
# strictly forbidden unless prior written permission is obtained from
# SiMa.ai. Access to the source code contained herein is hereby forbidden
# to anyone except current SiMa.ai employees, managers or contractors who
# have executed Confidentiality and Non-disclosure agreements explicitly
# covering such access.
#
# The copyright notice above does not evidence any actual or intended
# publication or disclosure of this source code, which includes information
# that is confidential and/or proprietary, and is a trade secret, of SiMa.ai.
#
# ANY REPRODUCTION, MODIFICATION, DISTRIBUTION, PUBLIC PERFORMANCE, OR PUBLIC
# DISPLAY OF OR THROUGH USE OF THIS SOURCE CODE WITHOUT THE EXPRESS WRITTEN
# CONSENT OF SiMa.ai IS STRICTLY PROHIBITED, AND IN VIOLATION OF APPLICABLE
# LAWS AND INTERNATIONAL TREATIES. THE RECEIPT OR POSSESSION OF THIS SOURCE
# CODE AND/OR RELATED INFORMATION DOES NOT CONVEY OR IMPLY ANY RIGHTS TO
# REPRODUCE, DISCLOSE OR DISTRIBUTE ITS CONTENTS, OR TO MANUFACTURE, USE, OR
# SELL ANYTHING THAT IT MAY DESCRIBE, IN WHOLE OR IN PART.
#
#**************************************************************************

import cv2
import numpy as np
import pickle as pkl

from afe.apis.defines import QuantizationParams, quantization_scheme, CalibrationMethod
from afe.apis.loaded_net import load_model
from afe.apis.release_v1 import get_model_sdk_version
from afe.ir.tensor_type import ScalarType
from afe.load.importers.general_importer import onnx_source
from afe.core.utils import convert_data_generator_to_iterable

from typing import Any, Dict
from pathlib import Path
from afe import DataGenerator

np.random.seed(9)

# Constants
ROOT_PATH = Path(__file__).parent.resolve()
MODEL_INPUT_NAME = "input"
MAX_DATA_SAMPLES = 50
MODELS_PATH = ROOT_PATH/"../../models"
DATA_PATH = ROOT_PATH/"../../data/"
MODEL_PATH = MODELS_PATH/"resnet50_model.onnx"
LABELS_PATH = DATA_PATH/"imagenet_labels.txt"
CALIBRATION_SET_PATH = DATA_PATH/"openimages_v7_images_and_labels.pkl"

# Dataset and preprocessing #
def create_imagenet_dataset(num_samples: int = 1) -> Dict[str, Any]:
    """
    Loads the first num_samples entries of the calibration set and returns
    { 'images': image arrays,
      'labels': reference labels }
    """
    dataset_path = CALIBRATION_SET_PATH

    with open(dataset_path, 'rb') as f:
        dataset = pkl.load(f)

    images_and_labels = {'images': dataset['data'][:num_samples],
                         'labels': dataset['target'][:num_samples]}

    return images_and_labels

def preprocess(image, skip_transpose=True, input_shape: tuple = (224, 224), scale_factor: float = 255.0):
    mean = [0.485, 0.456, 0.406]
    stddv = [0.229, 0.224, 0.225]

    # val224 images come in CHW format, need to transpose to HWC format
    if not skip_transpose:
        image = image.transpose(1, 2, 0)

    # Resize, scale to [0, 1], normalize with the ImageNet mean/std
    # (any BGR -> RGB conversion is done by the caller)
    image = cv2.resize(image, input_shape)
    image = image / scale_factor
    image = (image - mean) / stddv

    return image

# Post-process the output: return the top-1 class index and its probability
def postprocess_output(output: np.ndarray):
    probabilities = output[0][0]
    max_idx = np.argmax(probabilities)
    return max_idx, probabilities[max_idx]

# Get Model SDK version
sdk_version = get_model_sdk_version()
print(f"Model SDK version: {sdk_version}")

# Model information
input_name, input_shape, input_type = (MODEL_INPUT_NAME, (1, 3, 224, 224), ScalarType.float32)
input_shapes_dict = {input_name: input_shape}
input_types_dict = {input_name: input_type}

# Load the ONNX model
importer_params = onnx_source(str(MODEL_PATH), input_shapes_dict, input_types_dict)
loaded_net = load_model(importer_params)

# Create the calibration dataset
images_and_labels = create_imagenet_dataset(num_samples=MAX_DATA_SAMPLES)

# Create a DataGenerator from it and map the preprocessing function
images_generator = DataGenerator({MODEL_INPUT_NAME: images_and_labels["images"]})
images_generator.map({MODEL_INPUT_NAME: preprocess})

# Set up the quantization parameters and quantize using min-max calibration and INT8
quant_configs: QuantizationParams = QuantizationParams(calibration_method=CalibrationMethod.from_str('min_max'),
                                                       activation_quantization_scheme=quantization_scheme(asymmetric=True, per_channel=False, bits=8),
                                                       weight_quantization_scheme=quantization_scheme(asymmetric=False, per_channel=True, bits=8))

sdk_net = loaded_net.quantize(convert_data_generator_to_iterable(images_generator),
                              quant_configs,
                              model_name="quantized_resnet50",
                              arm_only=False)

# Execute the quantized net on samples from the calibration set
with open(LABELS_PATH, "r") as f:
    imagenet_labels = [line.strip() for line in f.readlines()]

for idx in range(6):
    sdk_net_output = sdk_net.execute(inputs={MODEL_INPUT_NAME: images_generator[idx][MODEL_INPUT_NAME]})
    inference_label, inference_result = postprocess_output(sdk_net_output)
    reference_label = images_and_labels["labels"][idx]

    print(f"[{idx}] --> {imagenet_labels[inference_label]} / {reference_label} -> {inference_result:.2%}")

# Load image -> preprocess -> inference -> postprocess -> print ; 207 is the expected label
print("Inference on a happy golden retriever (class 207) ..")
dog_image = cv2.imread(str(DATA_PATH/"golden_retriever_207.jpg"))
dog_image = cv2.cvtColor(dog_image, cv2.COLOR_BGR2RGB)  # OpenCV loads BGR; preprocess() uses RGB-ordered mean/std
pp_dog_image = np.expand_dims(preprocess(dog_image), axis=0).astype(np.float32)
sdk_net_output = sdk_net.execute(inputs={MODEL_INPUT_NAME: pp_dog_image})
inference_label, inference_result = postprocess_output(sdk_net_output)
print(f"[{idx}] --> {imagenet_labels[inference_label]} / 207 -> {inference_result:.2%}")

# Save model
sdk_net.save(model_name="quantized_resnet50", output_directory=str(MODELS_PATH))

# Compile the quantized net and generate the LM file and MPK JSON file
print("Compiling the model ..")
sdk_net.compile(output_path=str(MODELS_PATH/"compiled_resnet50"))
```
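The `preprocess` function in the script scales pixel values to [0, 1] and then applies the standard ImageNet per-channel mean and standard deviation. The following stdlib-only sketch repeats that arithmetic for a single RGB pixel so the numbers can be checked by hand. It is an illustration only, not part of the ModelSDK, and the helper name `normalize_pixel` is hypothetical.

```python
# Hypothetical helper: mirrors the arithmetic in preprocess() for one RGB pixel,
# without cv2 or NumPy. Not a ModelSDK API.
MEAN = (0.485, 0.456, 0.406)    # ImageNet per-channel mean (R, G, B), as in preprocess()
STDDEV = (0.229, 0.224, 0.225)  # ImageNet per-channel std (R, G, B), as in preprocess()


def normalize_pixel(rgb, scale_factor=255.0):
    """Scale an 8-bit RGB pixel to [0, 1], then subtract the mean and divide by the std."""
    return tuple((value / scale_factor - m) / s for value, m, s in zip(rgb, MEAN, STDDEV))


if __name__ == "__main__":
    # A pure-red pixel: R lands well above 0, G and B well below.
    print([round(v, 4) for v in normalize_pixel((255, 0, 0))])  # → [2.2489, -2.0357, -1.8044]
```

Because the mean and standard deviation are listed in R, G, B order, an image loaded by OpenCV (which returns BGR) must be converted to RGB first, which is why the script calls `cv2.cvtColor(..., cv2.COLOR_BGR2RGB)` before `preprocess`.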

To compile the model, you will need the following directory structure:

```console
sima-user@docker-image-id$ tree -L 3
.
├── data
│   ├── golden_retriever_207.jpg
│   ├── imagenet_labels.txt
│   └── openimages_v7_images_and_labels.pkl
├── models
│   ├── download_resnet50.py
│   └── resnet50_model.onnx
├── README.md
├── requirements.txt
└── src
    ├── modelsdk_quantize_model
    │   └── resnet50_quant.py
    └── x86_reference_app
        └── resnet50_reference_classification_app.py
```
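Before running the script, it can help to confirm that the files it reads are in place. The script resolves `models/` and `data/` two levels above its own location, which is the project root shown in the tree. The following stdlib-only sketch checks those same relative paths; the helper `missing_inputs` and the `REQUIRED_FILES` list are hypothetical conveniences, not part of the ModelSDK.

```python
from pathlib import Path

# Files resnet50_quant.py reads (plus the script itself), relative to the project root.
REQUIRED_FILES = (
    "models/resnet50_model.onnx",
    "data/imagenet_labels.txt",
    "data/openimages_v7_images_and_labels.pkl",
    "data/golden_retriever_207.jpg",
    "src/modelsdk_quantize_model/resnet50_quant.py",
)


def missing_inputs(project_root):
    """Return the required files that do not exist under project_root (hypothetical helper)."""
    root = Path(project_root)
    return [rel for rel in REQUIRED_FILES if not (root / rel).is_file()]


if __name__ == "__main__":
    missing = missing_inputs(".")
    if missing:
        print("Missing files:", *missing, sep="\n  ")
    else:
        print("All inputs found.")
```

Run it from the project root; an empty result means the layout matches what the script expects.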

Inside the Palette Docker container, run the script:

```console
sima-user@docker-image-id$ python src/modelsdk_quantize_model/resnet50_quant.py
/usr/local/lib/python3.10/site-packages/tensorflow/python/keras/engine/training_arrays_v1.py:37: UserWarning: A NumPy version >=1.23.5 and <2.3.0 is required for this version of SciPy (detected version 1.23.2)
  from scipy.sparse import issparse # pylint: disable=g-import-not-at-top
Model SDK version: 1.4.0
Running calibration ...DONE
...
Running quantization ...DONE
[0] --> 817: 'sports car, sport car', / ['Clothing', 'Person', 'Car', 'Wheel'] -> 47.84%
[1] --> 248: 'Eskimo dog, husky', / ['Dog'] -> 51.76%
[2] --> 668: 'mosque', / ['Person'] -> 93.72%
[3] --> 515: 'cowboy hat, ten-gallon hat', / ['Sun hat', 'Cowboy hat', 'Fedora', 'Clothing'] -> 98.82%
[4] --> 113: 'snail', / ['Animal', 'Snail'] -> 98.82%
[5] --> 517: 'crane', / ['Land vehicle'] -> 98.82%
Inference on a happy golden retriever (class 207) ..
[5] --> 207: 'golden retriever', / 207 -> 98.82%
Compiling the model ..
```

Each result line shows the top-1 ImageNet class predicted by the quantized model, the OpenImages v7 reference labels for that sample, and the top-1 probability. The `[5]` on the golden retriever line is the loop index left over from the previous `for` loop, not a sample index.
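The log reports a calibration pass followed by quantization. As a rough, textbook illustration of what the script's settings describe (`min_max` calibration, asymmetric per-tensor 8-bit activations, symmetric per-channel 8-bit weights), the following sketch derives scales and zero points from observed value ranges. It is not the ModelSDK's implementation: the signed integer ranges, the rounding mode, and all function names and data values here are assumptions chosen for illustration.

```python
# Textbook affine (scale + zero point) quantization, for illustration only.
# NOT the ModelSDK's internal algorithm; integer ranges and rounding are assumptions.


def asymmetric_params(min_val, max_val, bits=8):
    """Per-tensor asymmetric scale and zero point from a calibrated [min_val, max_val]."""
    qmin, qmax = -(2 ** (bits - 1)), 2 ** (bits - 1) - 1     # -128..127 for 8 bits
    min_val, max_val = min(min_val, 0.0), max(max_val, 0.0)  # range must contain 0.0
    scale = (max_val - min_val) / (qmax - qmin) or 1.0       # avoid a zero scale for all-zero data
    zero_point = round(qmin - min_val / scale)               # integer that represents 0.0
    return scale, zero_point


def symmetric_scale(values, bits=8):
    """Symmetric scale (zero point fixed at 0) for one weight channel."""
    qmax = 2 ** (bits - 1) - 1                               # 127 for 8 bits
    return max(abs(v) for v in values) / qmax or 1.0


def quantize(x, scale, zero_point, bits=8):
    """Round x onto the integer grid and clip to the signed range."""
    qmin, qmax = -(2 ** (bits - 1)), 2 ** (bits - 1) - 1
    return max(qmin, min(qmax, round(x / scale) + zero_point))


def dequantize(q, scale, zero_point):
    return (q - zero_point) * scale


# min_max calibration: record the smallest and largest activation seen across calibration batches.
calibration_batches = [[0.0, 1.5, 3.2], [-0.4, 2.0, 5.1]]  # made-up activation values
observed = [v for batch in calibration_batches for v in batch]
act_scale, act_zero_point = asymmetric_params(min(observed), max(observed))

# per_channel=True for weights: one scale per output channel instead of one for the whole tensor.
weights = [[0.5, -1.0, 0.25], [0.02, 0.01, -0.03]]        # made-up 2-channel weight tensor
weight_scales = [symmetric_scale(channel) for channel in weights]

if __name__ == "__main__":
    print(f"activation scale={act_scale:.6f}, zero point={act_zero_point}")
    print("per-channel weight scales:", [f"{s:.6f}" for s in weight_scales])
    # With a single per-tensor scale, the small second channel uses only a few integer levels.
    per_tensor = symmetric_scale([w for ch in weights for w in ch])
    print("channel 1, per-tensor:", [quantize(w, per_tensor, 0) for w in weights[1]])
    print("channel 1, per-channel:", [quantize(w, weight_scales[1], 0) for w in weights[1]])
```

The last two lines show why per-channel weight scales are common: a channel with small weights keeps far more of the 8-bit range when it has its own scale.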

After the script finishes, the `models` directory contains the saved quantized model (`quantized_resnet50.sima` and `quantized_resnet50.sima.json`, written by `sdk_net.save`) and the compiled archive (written by `sdk_net.compile`):

```console
sima-user@docker-image-id$ tree -L 3
.
├── data
│   ├── golden_retriever_207.jpg
│   ├── imagenet_labels.txt
│   └── openimages_v7_images_and_labels.pkl
├── models
│   ├── compiled_resnet50
│   │   └── quantized_resnet50_mpk.tar.gz
│   ├── download_resnet50.py
│   ├── quantized_resnet50.sima
│   ├── quantized_resnet50.sima.json
│   └── resnet50_model.onnx
├── README.md
├── requirements.txt
└── src
    ├── modelsdk_quantize_model
    │   └── resnet50_quant.py
    └── x86_reference_app
        └── resnet50_reference_classification_app.py
```

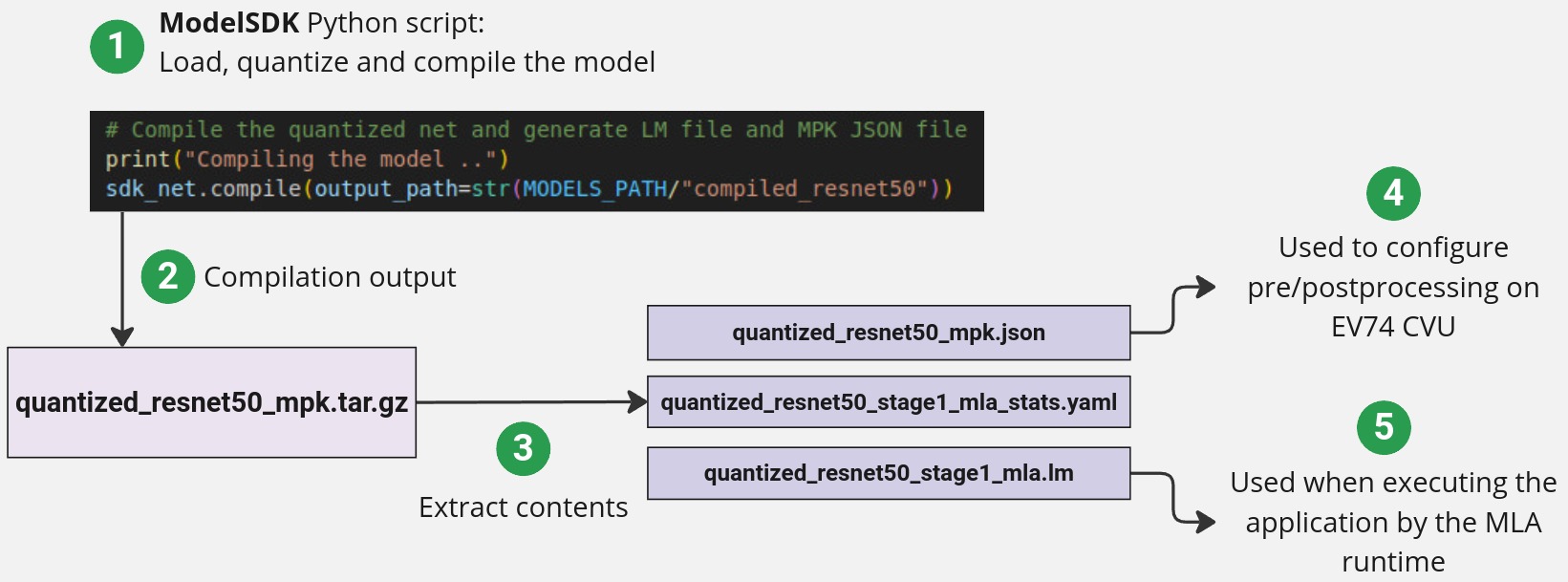

The output of compiling a model with the ModelSDK is a `.tar.gz` archive (here, `models/compiled_resnet50/quantized_resnet50_mpk.tar.gz`) that contains the compiled model (an `.lm` file), metadata in the form of a `*_mpk.json` file, and a stats file.

Both the `.lm` compiled model and the `*_mpk.json` file are used throughout this guide as you build the pipeline. For more information, refer to the ModelSDK section.
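To check what the compiled archive contains, the following sketch uses Python's standard `tarfile` and `json` modules to list the archive members, pick out the `.lm` model and the `*_mpk.json` metadata by file suffix, and parse the JSON without extracting anything to disk. The archive path comes from the directory listing above; the exact member names inside the archive and the JSON schema are not described here, so the sketch only matches suffixes and reports top-level keys. The helper name `inspect_mpk_archive` is hypothetical.

```python
import json
import tarfile
from pathlib import Path

# Path taken from the directory listing after compilation.
ARCHIVE = Path("models/compiled_resnet50/quantized_resnet50_mpk.tar.gz")


def inspect_mpk_archive(archive_path):
    """Return (lm_members, mpk_json_members, metadata) for a compiled-model archive.

    Members are matched by suffix only. metadata is the parsed content of the first
    *_mpk.json member, or None if the archive has no such member.
    """
    with tarfile.open(archive_path, "r:gz") as tar:
        names = tar.getnames()
        lm_members = [n for n in names if n.endswith(".lm")]
        json_members = [n for n in names if n.endswith("_mpk.json")]
        metadata = None
        if json_members:
            f = tar.extractfile(json_members[0])  # None if the member is not a regular file
            if f is not None:
                with f:
                    metadata = json.load(f)
    return lm_members, json_members, metadata


if __name__ == "__main__":
    if not ARCHIVE.is_file():
        print(f"{ARCHIVE} not found; run the compile script first.")
    else:
        lm, mpk, meta = inspect_mpk_archive(ARCHIVE)
        print("Compiled model(s):", lm)
        print("MPK metadata file(s):", mpk)
        if isinstance(meta, dict):
            print("Top-level metadata keys:", sorted(meta))
```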

Conclusion and next steps

In this section, we:

- Reviewed how to take an existing ResNet50 ONNX model and load, quantize, test, and compile it using the ModelSDK.
- Outlined how we will use the output `*.tar.gz` archive and its contents to develop an application and enable the runtime to run the model.

In the next section, we will review how to program the various hardware IPs on the MLSoC before diving into our development example.